Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

This document was translated from Chinese by AI and has not yet been reviewed.

The Agents page is an assistant marketplace where you can select or search for desired model presets. Click on a card to add the assistant to your conversation page's assistant list.

You can also edit and create your own assistants on this page.

Click My, then Create Agent to start building your own assistant.

This document was translated from Chinese by AI and has not yet been reviewed.

For knowledge base usage, please refer to the Knowledge Base Tutorial in the advanced tutorials.

This document was translated from Chinese by AI and has not yet been reviewed.

When the assistant does not have a default assistant model configured, the model selected by default for new conversations is the one set here. The model used for prompt optimization and word-selection assistant is also configured in this section.

After each conversation, a model is called to generate a topic name for the dialog. The model set here is the one used for naming.

The translation feature in input boxes for conversations, painting, etc., and the translation model in the translation interface all use the model set here.

The model used for the quick assistant feature. For details, see Quick Assistant.

This document was translated from Chinese by AI and has not yet been reviewed.

Key translation notes:

Preserved all Markdown formatting (headings, checkboxes)

Technical terms remain unchanged: "JavaScript", "SSO", "iOS", "Android"

Action descriptions standardized: "Quick Pop-up" for 快捷弹窗, "multi-model" for 多模型

Functional translations: "划词翻译" → "text selection translation"

Feature localization: "AI 通话" → "AI calls"

Maintained present tense for consistency

Preserved special characters and list formatting

Translated bracket content while keeping technical references (JavaScript)

Proper noun capitalization: "AI Notes"

This document was translated from Chinese by AI and has not yet been reviewed.

This document was translated from Chinese by AI and has not yet been reviewed.

Welcome to Cherry Studio (hereinafter referred to as "this software" or "we"). We highly value your privacy protection, and this Privacy Policy explains how we handle and protect your personal information and data. Please read and understand this agreement carefully before using this software:

To optimize user experience and improve software quality, we may only collect the following anonymous non-personal information: • Software version information; • Function activity and usage frequency; • Anonymous crash and error log information;

The above information is completely anonymous, does not involve any personally identifiable data, and cannot be associated with your personal information.

To maximize the protection of your privacy, we explicitly commit: • Will not collect, store, transmit, or process the model service API Key information you input into this software; • Will not collect, store, transmit, or process any conversation data generated during your use of this software, including but not limited to chat content, instruction information, knowledge base information, vector data, and other custom content; • Will not collect, store, transmit, or process any personally identifiable sensitive information.

This software uses the API Key of third-party model service providers that you apply for and configure yourself to complete model invocation and conversation functions. The model services you use (such as large language models, API interfaces, etc.) are provided and fully managed by the third-party provider you choose, and Cherry Studio only serves as a local tool providing interface invocation functionality with third-party model services.

Therefore: • All conversation data generated between you and the model service is unrelated to Cherry Studio. We neither participate in data storage nor perform any form of data transmission or relay; • You need to independently review and accept the privacy policies and relevant regulations of the corresponding third-party model service providers. These services' privacy policies can be viewed on each provider's official website.

You shall bear any privacy risks that may arise from using third-party model service providers. For specific privacy policies, data security measures, and related responsibilities, please refer to the relevant content on the official websites of your chosen model service providers. We assume no responsibility for these matters.

This agreement may be appropriately adjusted with software version updates. Please check it periodically. When substantive changes occur to the agreement, we will notify you through appropriate means.

If you have any questions regarding this agreement or Cherry Studio's privacy protection measures, please feel free to contact us at any time.

Thank you for choosing and trusting Cherry Studio. We will continue to provide you with a secure and reliable product experience.

This document was translated from Chinese by AI and has not yet been reviewed.

Quick Assistant is a convenient tool provided by Cherry Studio that allows you to quickly access AI functions in any application, enabling instant operations like asking questions, translation, summarization, and explanations.

Open Settings: Navigate to Settings -> Shortcuts -> Quick Assistant.

Enable Switch: Find and toggle on the Quick Assistant button.

Set Shortcut Key (Optional):

Default shortcut for Windows: Ctrl + E

Default shortcut for macOS: ⌘ + E

Customize your shortcut here to avoid conflicts or match your usage habits.

Activate: Press your configured shortcut key (or default shortcut) in any application to open Quick Assistant.

Interact: Within the Quick Assistant window, you can directly perform:

Quick Questions: Ask any question to the AI.

Text Translation: Input text to be translated.

Content Summarization: Input long text for summarization.

Explanation: Input concepts or terms requiring explanation.

Close: Press ESC or click anywhere outside the Quick Assistant window.

Shortcut Conflicts: Modify shortcuts if defaults conflict with other applications.

Explore More Functions: Beyond documented features, Quick Assistant may support operations like code generation and style transfer. Continuously explore during usage.

Feedback & Improvements: Report issues or suggestions to the Cherry Studio team via feedback.

This document was translated from Chinese by AI and has not yet been reviewed.

On this page, you can set the software's color theme, page layout, or customize CSS for personalized settings.

Here you can set the default interface color mode (light mode, dark mode, or follow system).

These settings are for the layout of the chat interface.

When this setting is enabled, clicking on the assistant's name will automatically switch to the corresponding topic page.

When enabled, the creation time of the topic will be displayed below it.

This setting allows flexible customization of the interface. For specific methods, please refer to Custom CSS in the advanced tutorial.

Windows 版本安装教程

This document was translated from Chinese by AI and has not yet been reviewed.

Note: Windows 7 system does not support Cherry Studio installation.

Click download to select the appropriate version

If the browser prompts that the file is not trusted, simply choose to keep it.

Choose to Keep→Trust Cherry-Studio

This document was translated from Chinese by AI and has not yet been reviewed.

The drawing feature currently supports painting models from DMXAPI, TokenFlux, AiHubMix, and . You can register an account at and to use this feature.

For questions about parameters, hover your mouse over the ? icon in corresponding areas to view descriptions.

This document was translated from Chinese by AI and has not yet been reviewed.

This page only introduces the interface functions. For configuration tutorials, please refer to the tutorial in the basic tutorials.

In Cherry Studio, a single service provider supports multi-key round-robin usage, where the keys are rotated from first to last in a list.

Add multiple keys separated by English commas, as shown in the example below:

You must use an English comma.

When using built-in service providers, you generally do not need to fill in the API address. If you need to modify it, please strictly follow the address provided in the official documentation.

If the address provided by the service provider is in the format https://xxx.xxx.com/v1/chat/completions, you only need to fill in the root address part (https://xxx.xxx.com).

Cherry Studio will automatically concatenate the remaining path (/v1/chat/completions). Not filling it as required may lead to improper functionality.

Typically, clicking the Manage button in the bottom left corner of the service provider configuration page will automatically fetch all models supported by that service provider. You can then click the + sign from the fetched list to add them to the model list.

Click the check button next to the API Key input box to test if the configuration is successful.

After successful configuration, make sure to turn on the switch in the upper right corner, otherwise, the service provider will remain disabled, and the corresponding models will not be found in the model list.

macOS 版本安装教程

This document was translated from Chinese by AI and has not yet been reviewed.

First, visit the official download page to download the Mac version, or click the direct link below

Please download the chip-specific version matching your Mac

After downloading, click here

Drag the icon to install

Find the Cherry Studio icon in Launchpad and click it. If the Cherry Studio main interface opens, the installation is successful.

This document was translated from Chinese by AI and has not yet been reviewed.

Log in to . If you don't have an Alibaba Cloud account, you'll need to register.

Click the Create My API-KEY button in the upper-right corner.

In the popup window, select the default workspace (or customize it if desired). You can optionally add a description.

Click the Confirm button in the lower-right corner.

You should now see a new entry in the list. Click the View button on the right.

Click the Copy button.

Go to Cherry Studio, navigate to Settings → Model Services → Alibaba Cloud Bailian, and paste the copied API key into the API Key field.

You can adjust related settings as described in , then start using the service.

This document was translated from Chinese by AI and has not yet been reviewed.

Log in and navigate to the tokens page

Create a new token (you can also directly use the default token ↑)

Copy the token

Open CherryStudio's service provider settings and click Add at the bottom of the provider list

Enter a note name, select OpenAI as the provider, and click OK

Paste the key you just copied

Return to the API Key page, copy the root address from the browser's address bar, for example:

Add models (click Manage to auto-fetch or enter manually) and toggle the switch in the top right corner to start using.

The interface may differ in other OneAPI themes, but the addition method follows the same workflow as above.

This document was translated from Chinese by AI and has not yet been reviewed.

1.2 Click the settings at the bottom left, and select 【SiliconFlow】 in the model service

1.2 Click the link to get SiliconCloud API Key

Log in to (if not registered, the first login will automatically register an account)

Visit to create a new key or copy an existing one

1.3 Click Manage to add models

Click the "Chat" button in the left menu bar

Enter text in the input box to start chatting

You can switch models by selecting the model name in the top menu

This document was translated from Chinese by AI and has not yet been reviewed.

To use GitHub Copilot, you must first have a GitHub account and subscribe to the GitHub Copilot service. The free subscription tier is acceptable, but note that it does not support the latest Claude 3.7 model. For details, refer to the .

Click "Log in with GitHub" to generate and copy your Device Code.

After obtaining your Device Code, click the link to open your browser. Log in to your GitHub account, enter the Device Code, and grant authorization.

After successful authorization, return to Cherry Studio and click "Connect GitHub". Your GitHub username and avatar will appear upon successful connection.

Click the "Manage" button below, which will automatically fetch the currently supported models list online.

The current implementation uses Axios for network requests. Note that Axios does not support SOCKS proxies. Please use a system proxy or HTTP proxy, or avoid setting proxies within CherryStudio and use a global proxy instead. First, ensure your network connection is stable to prevent Device Code retrieval failures.

This document was translated from Chinese by AI and has not yet been reviewed.

Use this method to clear CSS settings when incorrect CSS has been applied, or when you cannot access the settings interface after applying CSS.

Open the console by clicking on the CherryStudio window and pressing Ctrl+Shift+I (MacOS: command+option+I).

In the opened console window, click Console

Then manually enter document.getElementById('user-defined-custom-css').remove(). Copying and pasting will most likely not work.

After entering, press Enter to confirm and clear the CSS settings. Then, go back to CherryStudio's display settings and remove the problematic CSS code.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio data backup supports backup via S3 compatible storage (object storage). Common S3 compatible storage services include: AWS S3, Cloudflare R2, Alibaba Cloud OSS, Tencent Cloud COS, and MinIO.

Based on S3 compatible storage, multi-terminal data synchronization can be achieved by the method of Computer A S3 Storage Computer B.

Create an object storage bucket, and record the bucket name. It is highly recommended to set the bucket to private read/write to prevent backup data leakage!!

Refer to the documentation, go to the cloud service console to obtain information such as Access Key ID, Secret Access Key, Endpoint, Bucket, Region for S3 compatible storage.

Endpoint: The access address for S3 compatible storage, usually in the form of https://<bucket-name>.<region>.amazonaws.com or https://<ACCOUNT_ID>.r2.cloudflarestorage.com.

Region: The region where the bucket is located, such as us-west-1, ap-southeast-1, etc. For Cloudflare R2, please fill in auto.

Bucket: The bucket name.

Access Key ID and Secret Access Key: Credentials used for authentication.

Root Path: Optional, specifies the root path when backing up to the bucket, default is empty.

Related Documentation

AWS S3:

Cloudflare R2:

Alibaba Cloud OSS:

Tencent Cloud COS:

Fill in the above information in the S3 backup settings, click the backup button to perform backup, and click the manage button to view and manage the list of backup files.

This document was translated from Chinese by AI and has not yet been reviewed.

MCP (Model Context Protocol) is an open-source protocol designed to provide context information to Large Language Models (LLMs) in a standardized way. For more about MCP, see .

Below, we'll use the fetch function as an example to demonstrate how to use MCP in Cherry Studio. Details can be found in the .

Cherry Studio currently only uses its built-in and and will not reuse uv and bun already installed on your system.

In Settings - MCP Server, click the Install button to automatically download and install. Since it's downloaded directly from GitHub, the speed might be slow, and there's a high chance of failure. The success of the installation is determined by whether there are files in the folder mentioned below.

Executable installation directory:

Windows: C:\Users\username\.cherrystudio\bin

macOS, Linux: ~/.cherrystudio/bin

If installation fails:

You can symlink the corresponding commands from your system here. If the directory does not exist, you need to create it manually. Alternatively, you can manually download the executable files and place them in this directory:

Bun: UV:

This document was translated from Chinese by AI and has not yet been reviewed.

Automatically install MCP service (Beta)

Persistence memory base implementation based on local knowledge graph. This enables models to remember user-related information across different conversations.

An MCP server implementation providing tools for dynamic and reflective problem-solving through structured thought processes.

An MCP server implementation integrated with Brave Search API, offering dual functionality for both web and local searches.

MCP server for retrieving web content from URLs.

Node.js server implementing the Model Context Protocol (MCP) for file system operations.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio supports two ways to configure blacklists: manually and by adding subscription sources. For configuration rules, refer to

You can add rules for search results or click the toolbar icon to block specific websites. Rules can be specified using (e.g., *://*.example.com/*) or (e.g., /example\.(net|org)/).

You can also subscribe to public rule sets. This website lists some subscriptions: https://iorate.github.io/ublacklist/subscriptions

Here are some recommended subscription source links:

MEMORY_FILE_PATH=/path/to/your/file.jsonBRAVE_API_KEY=YOUR_API_KEY

https://git.io/ublacklist

Chinese

https://raw.githubusercontent.com/laylavish/uBlockOrigin-HUGE-AI-Blocklist/main/list_uBlacklist.txt

AI Generated

sk-xxxx1,sk-xxxx2,sk-xxxx3,sk-xxxx4

This document was translated from Chinese by AI and has not yet been reviewed.

This interface allows for local and cloud data backup and recovery, local data directory inquiry and cache clearing, export settings, and third-party connections.

Currently, data backup supports three methods: local backup, WebDAV backup, and S3-compatible storage (object storage) backup. For specific introductions and tutorials, please refer to the following documents:

Export settings allow you to configure the export options displayed in the export menu, as well as set the default path for Markdown exports, display styles, and more.

Third-party connections allow you to configure Cherry Studio's connection with third-party applications for quickly exporting conversation content to your familiar knowledge management applications. Currently supported applications include: Notion, Obsidian, SiYuan Note, Yuque, Joplin. For specific configuration tutorials, please refer to the following documents:

This document was translated from Chinese by AI and has not yet been reviewed.

We welcome contributions to Cherry Studio! You can contribute in the following ways:

Contribute code: Develop new features or optimize existing code.

Fix bugs: Submit bug fixes you discover.

Maintain issues: Help manage GitHub issues.

Product design: Participate in design discussions.

Write documentation: Improve user manuals and guides.

Community engagement: Join discussions and assist users.

Promote usage: Spread the word about Cherry Studio.

Email [email protected]

Email subject: Application to Become a Developer Email content: Reason for Application

This document was translated from Chinese by AI and has not yet been reviewed.

Email [email protected] to obtain editing privileges

Subject: Request for Cherry Studio Docs Editing Privileges

Body: State your reason for applying

This document was translated from Chinese by AI and has not yet been reviewed.

Follow our social accounts: Twitter (X), Xiaohongshu, Weibo, Bilibili, Douyin

Join our communities: QQ Group (575014769), Telegram, Discord, WeChat Group (Click to view)

Cherry Studio is an all-in-one AI assistant platform integrating multi-model conversations, knowledge base management, AI painting, translation, and more. Cherry Studio's highly customizable design, powerful extensibility, and user-friendly experience make it an ideal choice for both professional users and AI enthusiasts. Whether you are a beginner or a developer, you can find suitable AI features in Cherry Studio to enhance your work efficiency and creativity.

Multi-Model Responses: Supports generating replies to the same question simultaneously through multiple models, allowing users to compare the performance of different models. See Chat Interface for details.

Automatic Grouping: Chat records for each assistant are automatically grouped and managed, making it easy for users to quickly find historical conversations.

Chat Export: Supports exporting full or partial conversations into various formats (e.g., Markdown, Word), facilitating storage and sharing.

Highly Customizable Parameters: In addition to basic parameter adjustments, it also supports users filling in custom parameters to meet personalized needs.

Assistant Marketplace: Built-in with over a thousand industry-specific assistants covering translation, programming, writing, and other fields, while also supporting user-defined assistants.

Multi-Format Rendering: Supports Markdown rendering, formula rendering, real-time HTML preview, and other features to enhance content display.

AI Painting: Provides a dedicated drawing panel, allowing users to generate high-quality images through natural language descriptions.

AI Mini-Apps: Integrates various free web-based AI tools, allowing direct use without switching browsers.

Translation Feature: Supports dedicated translation panel, chat translation, prompt translation, and various other translation scenarios.

File Management: Files within chats, paintings, and knowledge bases are uniformly categorized and managed to avoid tedious searching.

Global Search: Supports quick location of historical records and knowledge base content, improving work efficiency.

Service Provider Model Aggregation: Supports unified invocation of models from mainstream service providers such as OpenAI, Gemini, Anthropic, and Azure.

Automatic Model Retrieval: One-click retrieval of the complete model list, no manual configuration required.

Multiple API Key Rotation: Supports rotating multiple API keys to avoid rate limit issues.

Accurate Avatar Matching: Automatically matches exclusive avatars for each model to enhance recognition.

Custom Service Providers: Supports the integration of third-party service providers compliant with OpenAI, Gemini, Anthropic, etc., offering strong compatibility.

Custom CSS: Supports global style customization to create a unique interface style.

Custom Chat Layout: Supports list or bubble style layouts, and allows customizing message styles (e.g., code snippet styles).

Custom Avatars: Supports setting personalized avatars for the software and assistants.

Custom Sidebar Menu: Users can hide or reorder sidebar functions according to their needs to optimize the user experience.

Multi-Format Support: Supports importing various file formats such as PDF, DOCX, PPTX, XLSX, TXT, and MD.

Multiple Data Source Support: Supports local files, URLs, sitemaps, and even manual input content as knowledge base sources.

Knowledge Base Export: Supports exporting processed knowledge bases for sharing with others.

Search Verification Support: After importing the knowledge base, users can perform real-time retrieval tests to view processing results and segmentation effects.

Quick Q&A: Summon the quick assistant in any scenario (e.g., WeChat, browser) to quickly get answers.

Quick Translate: Supports quick translation of words or text in other scenarios.

Content Summary: Quickly summarizes long text content, improving information extraction efficiency.

Explanation: Explains confusing questions with one click, no complex prompts required.

Multiple Backup Solutions: Supports local backup, WebDAV backup, and scheduled backup to ensure data security.

Data Security: Supports full local usage scenarios, combined with local large models, to avoid data leakage risks.

Beginner-Friendly: Cherry Studio is committed to lowering technical barriers, allowing users with no prior experience to get started quickly and focus on work, study, or creation.

Comprehensive Documentation: Provides detailed user documentation and a FAQ handbook to help users quickly resolve issues.

Continuous Iteration: The project team actively responds to user feedback and continuously optimizes features to ensure the healthy development of the project.

Open Source and Extensibility: Supports users in customizing and extending through open-source code to meet personalized needs.

Knowledge Management and Query: Quickly build and query exclusive knowledge bases through the local knowledge base feature, suitable for research, education, and other fields.

Multi-Model Chat and Creation: Supports simultaneous multi-model conversations, helping users quickly obtain information or generate content.

Translation and Office Automation: Built-in translation assistant and file processing features, suitable for users needing cross-language communication or document processing.

AI Painting and Design: Generate images through natural language descriptions to meet creative design needs.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio's translation feature provides you with fast and accurate text translation services, supporting mutual translation between multiple languages.

The translation interface mainly consists of the following components:

Source Language Selection Area:

Any Language: Cherry Studio will automatically identify the source language and perform translation.

Target Language Selection Area:

Dropdown Menu: Select the language you wish to translate the text into.

Settings Button:

Clicking will jump to Default Model Settings.

Scroll Synchronization:

Toggle to enable scroll sync (scrolling in either side will synchronize the other).

Text Input Box (Left):

Input or paste the text you need to translate.

Translation Result Box (Right):

Displays the translated text.

Copy Button: Click to copy the translation result to clipboard.

Translate Button:

Click this button to start translation.

Translation History (Top Left):

Click to view translation history records.

Select Target Language:

Choose your desired translation language in the Target Language Selection Area.

Input or Paste Text:

Enter or paste the text to be translated in the left text input box.

Start Translation:

Click the Translate button.

View and Copy Results:

Translation results will appear in the right result box.

Click the copy button to save the result to clipboard.

Q: What to do about inaccurate translations?

A: While AI translation is powerful, it's not perfect. For professional fields or complex contexts, manual proofreading is recommended. You may also try switching different models.

Q: Which languages are supported?

A: Cherry Studio translation supports multiple major languages. Refer to Cherry Studio's official website or in-app instructions for the specific supported languages list.

Q: Can entire files be translated?

A: The current interface primarily handles text translation. For document translation, please use Cherry Studio's conversation page to add files for translation.

Q: How to handle slow translation speeds?

A: Translation speed may be affected by network connection, text length, or server load. Ensure stable network connectivity and be patient.

暂时不支持Claude模型

This document was translated from Chinese by AI and has not yet been reviewed.

Before obtaining a Gemini API Key, you need to have a Google Cloud project (if you already have one, you can skip this step)

Go to Google Cloud to create a project, fill in the project name, and click "Create Project"

Go to the Vertex AI console

Enable the Vertex AI API in the created project

Open the Service Accounts permissions interface and create a service account

On the service account management page, find the service account you just created, click on Keys and create a new JSON format key

After successful creation, the key file will be automatically saved to your computer in JSON format. Please keep it safe

Select Vertex AI as the service provider

Fill in the corresponding fields from the JSON file

Click "Add Model" to start using it happily!

This document was translated from Chinese by AI and has not yet been reviewed.

Create an account and log in at Huawei Cloud

Click this link to enter the MaaS control panel

Authorization

Click Authentication Management in the sidebar, create an API Key (secret key) and copy it

Then create a new service provider in CherryStudio

After creation, enter the secret key

Click Model Deployment in the sidebar, claim all offerings

Click Call

Copy the address from ①, paste it into CherryStudio's service provider address field and add a "#" at the end and add a "#" at the end and add a "#" at the end and add a "#" at the end and add a "#" at the end Why add "#"? [see here](https://docs.cherry-ai.com/cherrystudio/preview/settings/providers#api-di-zhi) > You can choose not to read that and just follow this tutorial; > Alternatively, you can fill it by removing v1/chat/completions - feel free to use your own method if you know how, but if not, strictly follow this tutorial.

Then copy the model name from ②, and click the "+ Add" button in CherryStudio to create a new model

Enter the model name exactly as shown - do not add or remove anything, and don't include quotes. Copy exactly as in the example.

Click the Add Model button to complete.

This document was translated from Chinese by AI and has not yet been reviewed.

On the official API Key page, click + Create new secret key

Copy the generated key and open CherryStudio's Service Provider Settings

Find the service provider OpenAI and enter the key you just obtained.

Click "Manage" or "Add" at the bottom to add supported models, and then turn on the service provider switch in the top right corner to start using it.

This document was translated from Chinese by AI and has not yet been reviewed.

Have you ever experienced: saving 26 insightful articles in WeChat that you never open again, storing over 10 scattered files in a "Study Materials" folder on your computer, or trying to recall a theory you read half a year ago only to remember fragmented keywords? And when daily information exceeds your brain's processing limit, 90% of valuable knowledge gets forgotten within 72 hours. Now, by leveraging the Infini-AI large model service platform API + Cherry Studio to build a personal knowledge base, you can transform dust-collecting WeChat articles and fragmented course content into structured knowledge for precise retrieval.

1. Infini-AI API Service: The Stable "Thinking Hub" of Knowledge Bases

Serving as the "thinking hub" of knowledge bases, the Infini-AI large model service platform offers robust API services including full-capacity DeepSeek R1 and other model versions. Currently free to use without barriers after registration. Supports mainstream embedding models (bge, jina) for knowledge base construction. The platform continuously updates with the latest, most powerful open-source models, covering multiple modalities like images, videos, and audio.

2. Cherry Studio: Zero-Code Knowledge Base Setup

Compared to RAG knowledge base development requiring 1-2 months deployment time, Cherry Studio offers a significant advantage: zero-code operation. Instantly import multiple formats like Markdown/PDF/web pages – parsing 40MB files in 1 minute. Easily add local folders, saved WeChat articles, and course notes.

Step 1: Basic Preparation

Download the suitable version from Cherry Studio official site (https://cherry-ai.com/)

Register account: Log in to Infini-AI platform (https://cloud.infini-ai.com/genstudio/model?cherrystudio)

Get API key: Select "deepseek-r1" in Model Square, create and copy the API key + model name

Step 2: CherryStudio Settings

Go to model services → Select Infini-AI → Enter API key → Activate Infini-AI service

After setup, select the large model during interaction to use Infini-AI API in CherryStudio. Optional: Set as default model for convenience

Step 3: Add Knowledge Base

Choose any version of bge-series or jina-series embedding models from Infini-AI platform

After importing study materials, query: "Outline core formula derivations in Chapter 3 of 'Machine Learning'"

Result Demonstration

This document was translated from Chinese by AI and has not yet been reviewed.

Log in and open the token page

Click "Add Token"

Enter a token name and click Submit (configure other settings if needed)

Open CherryStudio's provider settings and click Add at the bottom of the provider list

Enter a remark name, select OpenAI as the provider, and click OK

Paste the key you just copied

Return to the API Key acquisition page and copy the root address from the browser's address bar, for example:

Add models (click Manage to automatically fetch or manually enter) and toggle the switch at the top right to use.

如何注册tavily?

This document was translated from Chinese by AI and has not yet been reviewed.

Visit the official website above, or go to Cherry Studio > Settings > Web Search > click "Get API Key" to directly access Tavily's login/registration page.

First-time users must register (Sign up) before logging in (Log in). The default page is the login interface.

Click "Sign up" and enter your email (or use Google/GitHub account) followed by your password.

🚨🚨🚨[Critical Step] After registration, a dynamic verification code is required. Scan the QR code to generate a one-time code.

Two solutions:

Download Microsoft Authenticator app (slightly complex)

Use WeChat Mini Program: Tencent Authenticator (recommended, as simple as it gets).

Search "Tencent Authenticator" in WeChat Mini Programs:

After completing these steps, you'll see the dashboard. Copy the API key to Cherry Studio to start using Tavily!

This document was translated from Chinese by AI and has not yet been reviewed.

Supports exporting topics and messages to SiYuan Note.

Open SiYuan Note and create a notebook

Open notebook settings and copy the Notebook ID

Paste the copied notebook ID into Cherry Studio settings

Fill in the SiYuan Note address

Local

Typically http://127.0.0.1:6806

Self-hosted

Use your domain http://note.domain.com

Copy the SiYuan Note API Token

Paste it into Cherry Studio settings and check

Congratulations, the configuration for SiYuan Note is complete ✅ Now you can export content from Cherry Studio to your SiYuan Note

This document was translated from Chinese by AI and has not yet been reviewed.

Automatic installation of MCP requires upgrading Cherry Studio to v1.1.18 or higher.

In addition to manual installation, Cherry Studio has a built-in @mcpmarket/mcp-auto-install tool that provides a more convenient way to install MCP servers. You simply need to enter specific commands in conversations with large models that support MCP services.

Beta Phase Reminder:

@mcpmarket/mcp-auto-install is currently in beta phase

Effectiveness depends on the "intelligence" of the large model - some configurations are automatically added, while others still require manual parameter adjustments in MCP settings

Current search sources are from @modelcontextprotocol, but this can be customized (explained below)

For example, you can input:

Help me install a filesystem mcp serverThe system will automatically recognize your requirements and complete the installation via @mcpmarket/mcp-auto-install. This tool supports various types of MCP servers, including but not limited to:

filesystem (file system)

fetch (network requests)

sqlite (database)

etc.

The MCP_PACKAGE_SCOPES variable allows customization of MCP service search sources. Default value:

@modelcontextprotocol.

@mcpmarket/mcp-auto-install LibraryThis document was translated from Chinese by AI and has not yet been reviewed.

Data added to the Cherry Studio knowledge base is entirely stored locally. During the addition process, a copy of the document will be placed in the Cherry Studio data storage directory.

Vector Database: https://turso.tech/libsql

After documents are added to the Cherry Studio knowledge base, they are segmented into multiple fragments. These fragments are then processed by the embedding model.

When using large models for Q&A, relevant text fragments matching the query will be retrieved and processed together by the large language model.

If you have data privacy requirements, it is recommended to use local embedding databases and local large language models.

This document was translated from Chinese by AI and has not yet been reviewed.

Dify Knowledge Base MCP requires upgrading Cherry Studio to v1.2.9 or higher.

Open Search MCP.

Add the dify-knowledge server.

Requires configuring parameters and environment variables

Dify Knowledge Base key can be obtained in the following way:

This document was translated from Chinese by AI and has not yet been reviewed.

Knowledge base document preprocessing requires upgrading Cherry Studio to v1.4.8 or higher.

After clicking 'Get API KEY', the application address will open in your browser. Click 'Apply Now' to fill out the form and obtain the API KEY, then fill it into the API KEY field.

Configure as shown above in the created knowledge base to complete the knowledge base document preprocessing configuration.

You can check knowledge base results by searching in the top right corner.

Knowledge Base Usage Tips: When using more capable models, you can change the knowledge base search mode to intent recognition. Intent recognition can describe your questions more accurately and broadly.

This document was translated from Chinese by AI and has not yet been reviewed.

Contact Person: Mr. Wang 📮: [email protected] 📱: 18954281942 (Not a customer service hotline)

For usage inquiries: • Join our user community at the bottom of the official website homepage • Email [email protected] • Or submit issues: https://github.com/CherryHQ/cherry-studio/issues

For additional guidance, join our Knowledge Planet

Commercial license details: https://docs.cherry-ai.com/contact-us/questions/cherrystudio-xu-ke-xie-yi

This document was translated from Chinese by AI and has not yet been reviewed.

Before obtaining the Gemini API key, you need a Google Cloud project (skip this step if you already have one).

Go to to create a project, fill in the project name, and click "Create Project".

On the official , click Create API Key.

Copy the generated key, then open CherryStudio's .

Find the Gemini service provider and enter the key you just obtained.

Click "Manage" or "Add" at the bottom, add the supported models, then toggle the service provider switch at the top right to start using.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio supports importing topics into Notion databases.

Visit to create an application

Create an application

Name: Cherry Studio Type: Select the first option Icon: You can save this image

Copy the secret key and enter it in Cherry Studio settings

Open website and create a new page. Select database type below, name it Cherry Studio, and connect as shown

If your Notion database URL looks like this:

https://www.notion.so/<long_hash_1>?v=<long_hash_2>

Then the Notion database ID is the part <long_hash_1>

Fill in Page Title Field Name:

If your interface is in English, enter Name

If your interface is in Chinese, enter 名称

Congratulations! Notion configuration is complete ✅ You can now export Cherry Studio content to your Notion database

This document was translated from Chinese by AI and has not yet been reviewed.

Log in to

Or click

Click in the sidebar

Create an API Key

After creation, click the eye icon next to the created API Key to reveal and copy it

Paste the copied API Key into Cherry Studio and then toggle the provider switch to ON

Enable the models you need at the bottom of the sidebar in the Ark console under . You can enable the Doubao series, DeepSeek, and other models as required

In the , find the model ID corresponding to the desired model

Open Cherry Studio's settings and locate Volcano Engine

Click Add, then paste the previously obtained model ID into the model ID text field

Follow this process to add models one by one

There are two ways to write the API address:

First, the default in the client: https://ark.cn-beijing.volces.com/api/v3/

Second: https://ark.cn-beijing.volces.com/api/v3/chat/completions#

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio data backup supports WebDAV for backup. You can choose a suitable WebDAV service for cloud backup.

Based on WebDAV, you can achieve multi-device data synchronization through Computer A WebDAV Computer B.

Log in to Jianguoyun, click your username in the top right corner, and select "Account Info":

Select "Security Options" and click "Add Application":

Enter the application name and generate a random password;

Copy and record the password;

Get the server address, account, and password;

In Cherry Studio Settings -> Data Settings, fill in the WebDAV information;

Choose to back up or restore data, and you can set the automatic backup time period.

WebDAV services with a lower barrier to entry are generally cloud drives:

(requires membership)

(requires purchase)

(Free storage capacity is 10GB, single file size limit is 250MB.)

(Dropbox offers 2GB free, can expand by 16GB by inviting friends.)

(Free space is 10GB, an additional 5GB can be obtained through invitation.)

(Free users get 10GB capacity.)

Secondly, there are some services that require self-deployment:

This document was translated from Chinese by AI and has not yet been reviewed.

Open Cherry Studio settings.

Find the MCP Server option.

Click Add Server.

Fill in the relevant parameters for the MCP Server (). Content that may need to be filled in includes:

Name: Customize a name, for example fetch-server

Type: Select STDIO

Command: Enter uvx

Arguments: Enter mcp-server-fetch

(There may be other parameters, depending on the specific Server)

Click Save.

After completing the above configuration, Cherry Studio will automatically download the required MCP Server - fetch server. Once the download is complete, we can start using it! Note: If mcp-server-fetch fails to configure, try restarting your computer.

Successfully added the MCP Server in the MCP Server settings.

As seen from the image above, by integrating MCP's fetch capability, Cherry Studio can better understand user query intent, retrieve relevant information from the web, and provide more accurate and comprehensive answers.

This document was translated from Chinese by AI and has not yet been reviewed.

ModelScope MCP Server requires Cherry Studio to be upgraded to v1.2.9 or higher.

In version v1.2.9, Cherry Studio and ModelScope reached an official collaboration, greatly simplifying the steps for adding MCP servers, avoiding configuration errors, and allowing you to discover a vast number of MCP servers in the ModelScope community. Follow the steps below to see how to sync ModelScope's MCP servers in Cherry Studio.

Click on MCP Server Settings in Settings, then select Sync Servers.

Select ModelScope and browse to discover MCP services.

Register and log in to ModelScope, then view MCP service details;

In the MCP service details, select connect to service;

Click "Get API Token" in Cherry Studio to jump to the ModelScope official website, copy the API token, and then paste it back into Cherry Studio.

In Cherry Studio's MCP server list, you can see the ModelScope connected MCP services and invoke them in conversations.

For newly connected MCP servers on the ModelScope website in the future, simply click Sync Servers to add them incrementally.

Through the steps above, you have successfully mastered how to conveniently sync MCP servers from ModelScope in Cherry Studio. The entire configuration process is not only greatly simplified, effectively avoiding the tediousness and potential errors of manual configuration, but also allows you to easily access the vast MCP server resources provided by the ModelScope community.

Start exploring and using these powerful MCP services to bring more convenience and possibilities to your Cherry Studio experience!

This document was translated from Chinese by AI and has not yet been reviewed.

Join the Telegram discussion group for assistance:

GitHub Issues:

Email the developers: [email protected]

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio is a free and open-source project. As the project grows, the workload of the team increases significantly. To reduce communication costs and efficiently resolve your issues, we encourage everyone to follow the steps below when reporting problems. This will allow our team to dedicate more time to project maintenance and development. Thank you for your cooperation!

Most basic problems can be resolved by carefully reviewing the documentation:

Functionality and usage questions can be answered in the documentation;

High-frequency issues are compiled on the page—check there first for solutions;

Complex questions can often be resolved through search or using the search bar;

Carefully read all hint boxes in documents to avoid many common issues;

Check existing solutions in the GitHub .

For model-related issues unrelated to client functionality (e.g., model errors, unexpected responses, parameter settings):

Search online for solutions first;

Provide error messages to AI assistants for resolution suggestions.

If steps 1-2 don't solve your problem:

Seek help in our official , , or () When reporting:

For model errors:

Provide full screenshots with error messages visible

Include console errors ()

Sensitive information can be redacted, but keep model names, parameters, and error details

For software bugs:

Give detailed error descriptions

Provide precise reproduction

Include OS (Windows/Mac/Linux) and software version number

For intermittent issues, describe scenarios and configurations comprehensively

Request Documentation or Suggest Improvements

Contact via Telegram @Wangmouuu, QQ (1355873789), or email [email protected].

Monaspace

English Font Commercial Use

GitHub has launched an open-source font family called Monaspace, featuring five styles: Neon (modern style), Argon (humanist style), Xenon (serif style), Radon (handwritten style), and Krypton (mechanical style).

MiSans Global

Multilingual Commercial Use

MiSans Global is a global language font customization project led by Xiaomi, created in collaboration with Monotype and Hanyi.

This comprehensive font family covers over 20 writing systems and supports more than 600 languages.

如何在 Cherry Studio 使用联网模式

This document was translated from Chinese by AI and has not yet been reviewed.

In the Cherry Studio question window, click the 【Globe】 icon to enable internet access.

Mode 1: Models with built-in internet function from providers

When using such models, enabling internet access requires no extra steps - it's straightforward.

Quickly identify internet-enabled models by checking for a small globe icon next to the model name above the chat interface.

This method also helps quickly distinguish internet-enabled models in the Model Management page.

Cherry Studio currently supports internet-enabled models from

Google Gemini

OpenRouter (all models support internet)

Tencent Hunyuan

Zhipu AI

Alibaba Bailian, etc.

Important note:

Special cases exist where models may access internet without the globe icon, as explained in the tutorial below.

Mode 2: Models without internet function use Tavily service

When using models without built-in internet (no globe icon), use Tavily search service to process real-time information.

First-time Tavily setup triggers a setup prompt - simply follow the instructions!

After clicking, you'll be redirected to Tavily's website to register/login. Create and copy your API key back to Cherry Studio.

Registration guide available in Tavily tutorial within this documentation directory.

Tavily registration reference:

The following interface confirms successful registration.

Test again for results: shows normal internet search with default result count (5).

Note: Tavily has monthly free tier limits - exceeding requires payment~~

PS: Please report any issues you encounter.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio not only integrates mainstream AI model services but also empowers you with powerful customization capabilities. Through the Custom AI Providers feature, you can easily integrate any AI model you require.

Flexibility: Break free from predefined provider lists and freely choose the AI models that best suit your needs.

Diversity: Experiment with various AI models from different platforms to discover their unique advantages.

Controllability: Directly manage your API keys and access addresses to ensure security and privacy.

Customization: Integrate privately deployed models to meet the demands of specific business scenarios.

Add your custom AI provider to Cherry Studio in just a few simple steps:

Open Settings: Click the "Settings" (gear icon) in the left navigation bar of the Cherry Studio interface.

Enter Model Services: Select the "Model Services" tab in the settings page.

Add Provider: On the "Model Services" page, you'll see existing providers. Click the "+ Add" button below the list to open the "Add Provider" pop-up.

Fill in Information: In the pop-up, provide the following details:

Provider Name: Give your custom provider a recognizable name (e.g., MyCustomOpenAI).

Provider Type: Select your provider type from the dropdown menu. Currently supports:

OpenAI

Gemini

Anthropic

Azure OpenAI

Save Configuration: After filling in the details, click the "Add" button to save your configuration.

After adding, locate your newly added provider in the list and configure it:

Activation Status: Toggle the activation switch on the far right of the list to enable this custom service.

API Key:

Enter the API key provided by your AI provider.

Click the "Test" button to verify the key's validity.

API Address:

Enter the base URL to access the AI service.

Always refer to your AI provider's official documentation for the correct API address.

Model Management:

Click the "+ Add" button to manually add model IDs you want to use under this provider (e.g., gpt-3.5-turbo, gemini-pro).

If unsure about specific model names, consult your AI provider's official documentation.

Click the "Manage" button to edit or delete added models.

After completing the above configurations, you can select your custom AI provider and model in Cherry Studio's chat interface and start conversing with AI!

vLLM is a fast and easy-to-use LLM inference library similar to Ollama. Here's how to integrate vLLM into Cherry Studio:

Install vLLM: Follow vLLM's official documentation (https://docs.vllm.ai/en/latest/getting_started/quickstart.html) to install vLLM.

pip install vllm # if using pip

uv pip install vllm # if using uvLaunch vLLM Service: Start the service using vLLM's OpenAI-compatible interface via two main methods:

Using vllm.entrypoints.openai.api_server

python -m vllm.entrypoints.openai.api_server --model gpt2Using uvicorn

vllm --model gpt2 --served-model-name gpt2Ensure the service launches successfully, listening on the default port 8000. You can also specify a different port using the --port parameter.

Add vLLM Provider in Cherry Studio:

Follow the steps above to add a new custom AI provider.

Provider Name: vLLM

Provider Type: Select OpenAI.

Configure vLLM Provider:

API Key: Leave this field blank or enter any value since vLLM doesn't require an API key.

API Address: Enter vLLM's API address (default: http://localhost:8000/, adjust if using a different port).

Model Management: Add the model name loaded in vLLM (e.g., gpt2 for the command python -m vllm.entrypoints.openai.api_server --model gpt2).

Start Chatting: Now select the vLLM provider and the gpt2 model in Cherry Studio to chat with the vLLM-powered LLM!

Read Documentation Carefully: Before adding custom providers, thoroughly review your AI provider's official documentation for API keys, addresses, model names, etc.

Test API Keys: Use the "Test" button to quickly verify API key validity.

Verify API Addresses: Different providers and models may have varying API addresses—ensure correctness.

Add Models Judiciously: Only add models you'll actually use to avoid cluttering.

This document was translated from Chinese by AI and has not yet been reviewed.

ModelScope is a new generation open-source Model-as-a-Service (MaaS) sharing platform, dedicated to providing flexible, easy-to-use, and low-cost one-stop model service solutions for general AI developers, making model application simpler!

Through its API-Inference as a Service capability, the platform standardizes open-source models into callable API interfaces, allowing developers to easily and quickly integrate model capabilities into various AI applications, supporting innovative scenarios such as tool invocation and prototype development.

✅ Free Quota: Provides 2000 free API calls daily (Billing Rules)

✅ Rich Model Library: Covers 1000+ open-source models including NLP, CV, Speech, Multimodal, etc.

✅ Ready-to-Use: No deployment needed, quick invocation via RESTful API

Log In to the Platform

Visit ModelScope Official Website → Click Log In at the top right → Select authentication method

Create Access Token

Click New Token → Fill in description → Copy the generated token (Page example shown below)

🔑 Important Tip: Token leakage will affect account security!

Open Cherry Studio → Settings → Model Service → ModelScope

Paste the copied token into the API Key field

Click Save to complete authorization

Find Models Supporting API

Visit ModelScope Model Library

Filter: Check API-Inference (or look for the API icon on the model card)

The scope of models covered by API-Inference is primarily determined by their popularity within the Moda community (referencing data such as likes and downloads). Therefore, the list of supported models will continue to iterate after the release of more powerful and highly-regarded next-generation open-source models.

Get Model ID

Go to the target model's detail page → Copy Model ID (format like damo/nlp_structbert_sentiment-classification_chinese-base)

Fill into Cherry Studio

On the model service configuration page, enter the ID in the Model ID field → Select task type → Complete configuration

🎫 Free Quota: 2000 API calls per user daily (*Subject to the latest rules on the official website)

🔁 Quota Reset: Automatically resets daily at UTC+8 00:00, does not support cross-day accumulation or upgrade

💡 Over-quota Handling:

After reaching the daily limit, the API will return a 429 error

Solution: Switch to a backup account / Use another platform / Optimize call frequency

Log in to ModelScope → Click Username at the top right → API Usage

⚠️ Note: Inference API-Inference has a free daily quota of 2000 calls. For more calling needs, consider using cloud services like Alibaba Cloud Bailian.

This document was translated from Chinese by AI and has not yet been reviewed.

By customizing CSS, you can modify the software's appearance to better suit your preferences, like this:

:root {

--color-background: #1a462788;

--color-background-soft: #1a4627aa;

--color-background-mute: #1a462766;

--navbar-background: #1a4627;

--chat-background: #1a4627;

--chat-background-user: #28b561;

--chat-background-assistant: #1a462722;

}

#content-container {

background-color: #2e5d3a !important;

}:root {

font-family: "汉仪唐美人" !important; /* Font */

}

/* Color of expanded deep thinking text */

.ant-collapse-content-box .markdown {

color: red;

}

/* Theme variables */

:root {

--color-black-soft: #2a2b2a; /* Dark background color */

--color-white-soft: #f8f7f2; /* Light background color */

}

/* Dark theme */

body[theme-mode="dark"] {

/* Colors */

--color-background: #2b2b2b; /* Dark background color */

--color-background-soft: #303030; /* Light background color */

--color-background-mute: #282c34; /* Neutral background color */

--navbar-background: var(-–color-black-soft); /* Navigation bar background */

--chat-background: var(–-color-black-soft); /* Chat background */

--chat-background-user: #323332; /* User chat background */

--chat-background-assistant: #2d2e2d; /* Assistant chat background */

}

/* Dark theme specific styles */

body[theme-mode="dark"] {

#content-container {

background-color: var(-–chat-background-assistant) !important; /* Content container background */

}

#content-container #messages {

background-color: var(-–chat-background-assistant); /* Messages background */

}

.inputbar-container {

background-color: #3d3d3a; /* Input bar background */

border: 1px solid #5e5d5940; /* Input bar border color */

border-radius: 8px; /* Input bar border radius */

}

/* Code styles */

code {

background-color: #e5e5e20d; /* Code background */

color: #ea928a; /* Code text color */

}

pre code {

color: #abb2bf; /* Preformatted code text color */

}

}

/* Light theme */

body[theme-mode="light"] {

/* Colors */

--color-white: #ffffff; /* White */

--color-background: #ebe8e2; /* Light background */

--color-background-soft: #cbc7be; /* Light background */

--color-background-mute: #e4e1d7; /* Neutral background */

--navbar-background: var(-–color-white-soft); /* Navigation bar background */

--chat-background: var(-–color-white-soft); /* Chat background */

--chat-background-user: #f8f7f2; /* User chat background */

--chat-background-assistant: #f6f4ec; /* Assistant chat background */

}

/* Light theme specific styles */

body[theme-mode="light"] {

#content-container {

background-color: var(-–chat-background-assistant) !important; /* Content container background */

}

#content-container #messages {

background-color: var(-–chat-background-assistant); /* Messages background */

}

.inputbar-container {

background-color: #ffffff; /* Input bar background */

border: 1px solid #87867f40; /* Input bar border color */

border-radius: 8px; /* Input bar border radius */

}

/* Code styles */

code {

background-color: #3d39290d; /* Code background */

color: #7c1b13; /* Code text color */

}

pre code {

color: #000000; /* Preformatted code text color */

}

}For more theme variables, refer to the source code: https://github.com/CherryHQ/cherry-studio/tree/main/src/renderer/src/assets/styles

Cherry Studio Theme Library: https://github.com/boilcy/cherrycss

Share some Chinese-style Cherry Studio theme skins: https://linux.do/t/topic/325119/129

This document was translated from Chinese by AI and has not yet been reviewed.

Trace provides users with dialogue insights, helping them understand the specific performance of models, knowledge bases, MCP, web search, etc., during the conversation process. It is an observability tool implemented based on OpenTelemetry, which enables visualization through client-side data collection, storage, and processing, providing quantitative evaluation basis for problem localization and performance optimization.

Each conversation corresponds to one trace data, and a trace is composed of multiple spans. Each span corresponds to a program processing logic in Cherry Studio, such as calling a model session, calling MCP, calling a knowledge base, calling web search, etc. Traces are displayed in a tree structure, with spans as tree nodes. The main data includes time consumption and token usage. Of course, the specific input and output can also be viewed in the span details.

By default, after Cherry Studio is installed, Trace is hidden. It needs to be enabled in "Settings" - "General Settings" - "Developer Mode", as shown below:

Also, Trace records will not be generated for previous sessions; they will only be generated after new questions and answers occur. The generated records are stored locally. If you need to clear Trace completely, you can do so by going to "Settings" - "Data Settings" - "Data Directory" - "Clear Cache", or by manually deleting files under ~/.cherrystudio/trace, as shown below:

Click "Trace" in the Cherry Studio chat window to view the full trace data. Regardless of whether a model, web search, knowledge base, or MCP was called during the conversation, you can view the full trace data in the trace window.

If you want to view the details of a model in the trace, click on the model call node to view its input and output details.

If you want to view the details of a web search in the trace, click on the web search call node to view its input and output details. In the details, you can see the question queried by the web search and its returned results.

If you want to view the details of a knowledge base in the trace, click on the knowledge base call node to view its input and output details. In the details, you can see the question queried by the knowledge base and its returned answer.

If you want to view the details of MCP in the trace, click on the MCP call node to view its input and output details. In the details, you can see the input parameters for calling this MCP Server tool and the tool's return.

This feature is provided by the Alibaba Cloud EDAS team. If you have any questions or suggestions, please join the DingTalk group (Group ID: 21958624) for in-depth communication with the developers.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio is a multi-model desktop client currently supporting installation packages for Windows, Linux, and macOS systems. It integrates mainstream LLM models to provide multi-scenario assistance. Users can enhance work efficiency through smart conversation management, open-source customization, and multi-theme interfaces.

Cherry Studio is now deeply integrated with PPIO's High-Performance API Channel – leveraging enterprise-grade computing power to ensure high-speed response for DeepSeek-R1/V3 and 99.9% service availability, delivering a fast and smooth experience.

The tutorial below provides a complete integration solution (including API key configuration), enabling the advanced mode of Cherry Studio Intelligent Scheduling + PPIO High-Performance API within 3 minutes.

First download Cherry Studio from the official website: (If inaccessible, download your required version from Quark Netdisk: )

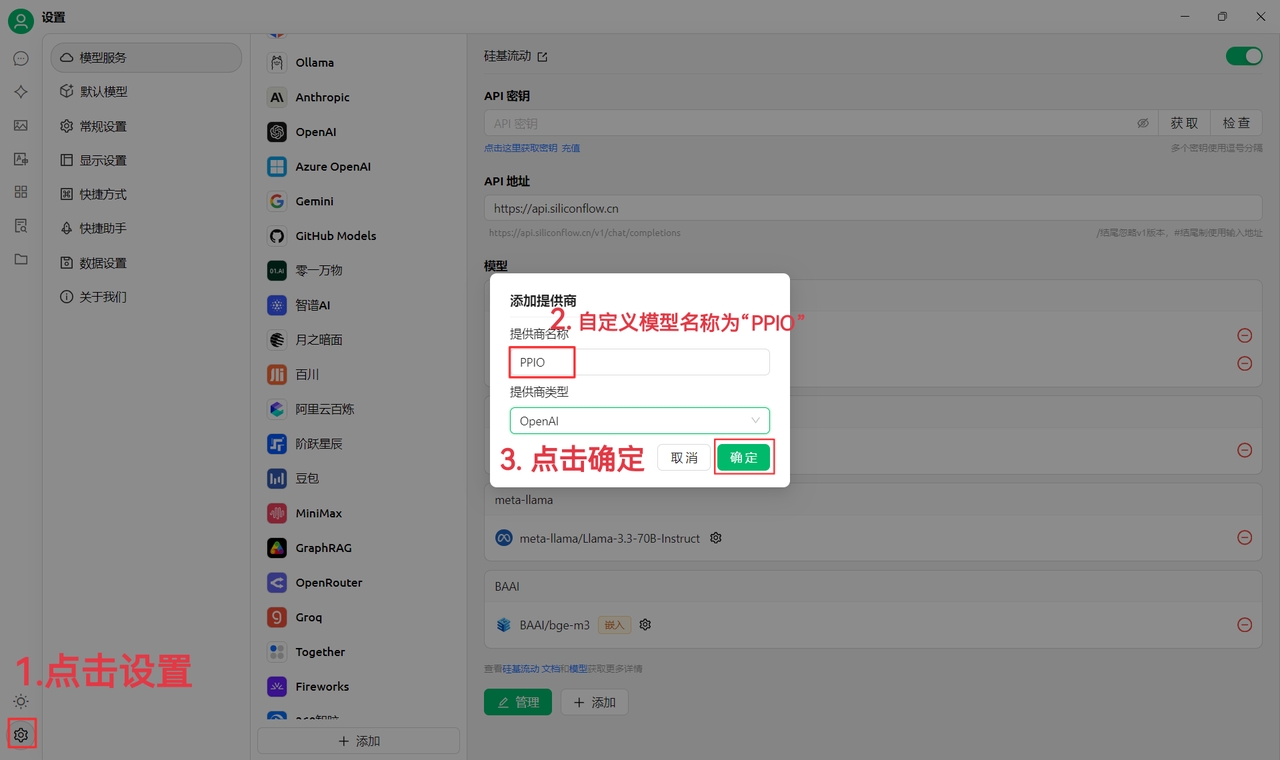

(1) Click the settings icon in the bottom left corner, set the provider name to PPIO, and click "OK"

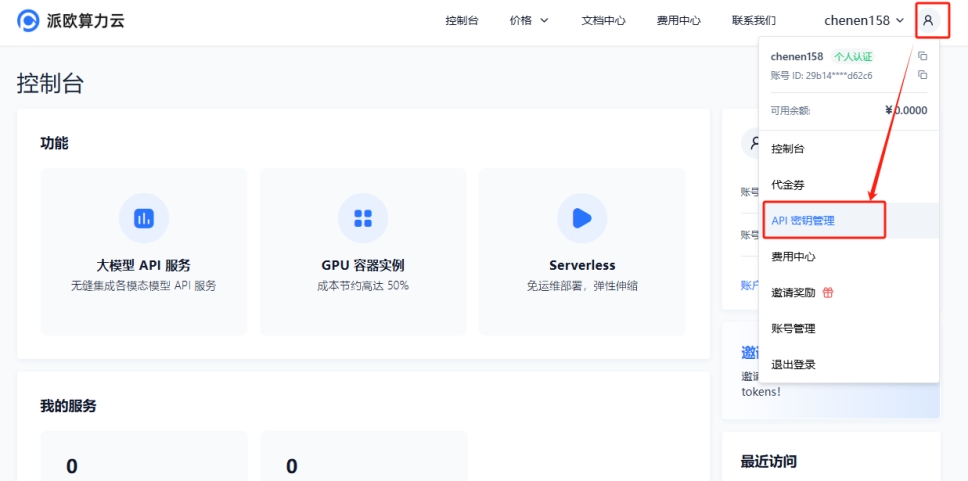

(2) Visit , click 【User Avatar】→【API Key Management】 to enter console

Click 【+ Create】 to generate a new API key. Customize a key name. Generated keys are visible only at creation – immediately copy and save them to avoid affecting future usage

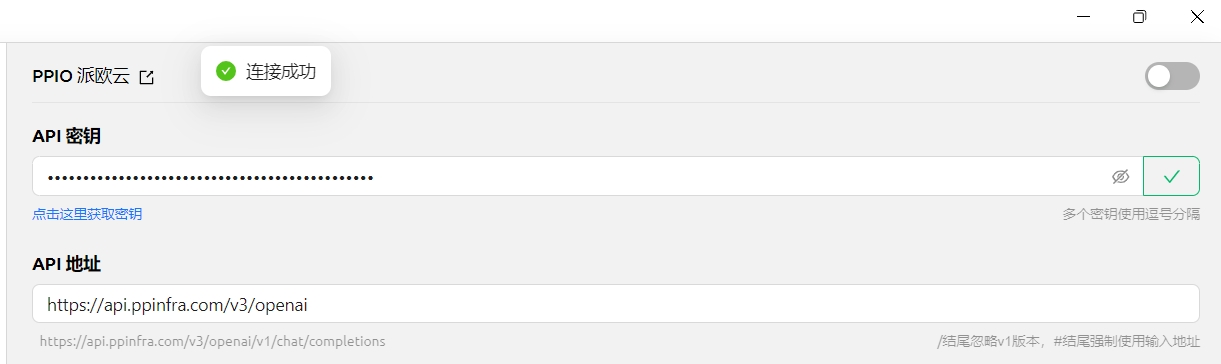

(3) In Cherry Studio settings, select 【PPIO Paiou Cloud】, enter the API key generated on the official website, then click 【Verify】

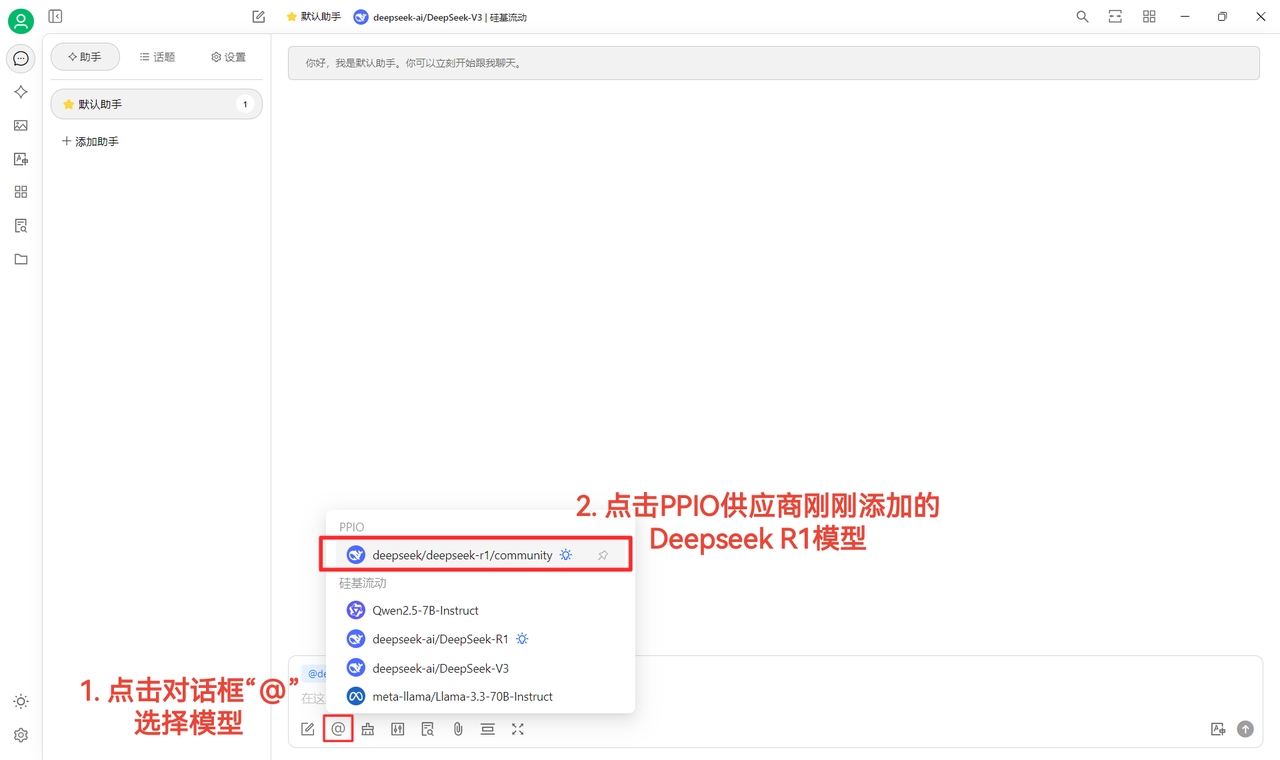

(4) Select model: using deepseek/deepseek-r1/community as example. Switch directly if needing other models.

DeepSeek R1 and V3 community versions are for trial use only. They are full-parameter models with identical stability and performance. For high-volume usage, top up and switch to non-community versions.

(1) After clicking 【Verify】 and seeing successful connection, it's ready for use

(2) Finally, click 【@】 and select the newly added DeepSeek R1 model under PPIO provider to start chatting~

【Partial material source: 】

For visual learners, we've prepared a Bilibili video tutorial. Follow step-by-step instructions to quickly master PPIO API + Cherry Studio configuration. Click the link to jump directly: →

【Video material source: sola】

This document was translated from Chinese by AI and has not yet been reviewed.

To allow every developer and user to easily experience the capabilities of cutting-edge large models, Zhipu has made the GLM-4.5-Air model freely available to Cherry Studio users. As an efficient foundational model specifically designed for agent applications, GLM-4.5-Air strikes an excellent balance between performance and cost, making it an ideal choice for building intelligent applications.

🚀 What is GLM-4.5-Air?

GLM-4.5-Air is Zhipu's latest high-performance language model, featuring an advanced Mixture-of-Experts (MoE) architecture. It significantly reduces computational resource consumption while maintaining excellent inference capabilities.

Total Parameters: 106 Billion

Active Parameters: 12 Billion

Through its streamlined design, GLM-4.5-Air achieves higher inference efficiency, making it suitable for deployment in resource-constrained environments while still capable of handling complex tasks.

📚 Unified Training Process, Solidifying Intelligent Foundations

GLM-4.5-Air shares a consistent training process with its flagship series, ensuring it possesses a solid foundation of general capabilities:

Large-scale Pre-training: Trained on up to 15 trillion tokens of general corpus to build extensive knowledge comprehension abilities;

Specialized Domain Optimization: Enhanced training on key tasks such as code generation, logical reasoning, and agent interaction;

Long Context Support: Context length extended to 128K tokens, capable of processing long documents, complex dialogues, or large code projects;

Reinforcement Learning Enhancement: RL optimization improves the model's decision-making capabilities in inference planning, tool calling, and other aspects.

This training system endows GLM-4.5-Air with excellent generalization and task adaptation capabilities.

⚙️ Core Capabilities Optimized for Agents

GLM-4.5-Air is deeply adapted for agent application scenarios, offering the following practical capabilities:

✅ Tool Calling Support: Can call external tools via standardized interfaces to automate tasks ✅ Web Browsing and Information Extraction: Can work with browser plugins to understand and interact with dynamic content ✅ Software Engineering Assistance: Supports requirements parsing, code generation, defect identification, and repair ✅ Front-end Development Support: Has a good understanding and generation capability for front-end technologies such as HTML, CSS, and JavaScript

This model can be flexibly integrated into code agent frameworks like Claude Code and Roo Code, or used as the core engine for any custom Agent.

💡 Intelligent "Thinking Mode" for Flexible Response to Various Requests

GLM-4.5-Air supports a hybrid inference mode, allowing users to control whether deep thinking is enabled via the thinking.type parameter:

``enabled`: Enables thinking, suitable for complex tasks requiring step-by-step reasoning or planning

``disabled`: Disables thinking, used for simple queries or immediate responses

Default setting is dynamic thinking mode, where the model automatically determines if deep analysis is needed

🌟 High Efficiency, Low Cost, Easier Deployment

GLM-4.5-Air achieves an excellent balance between performance and cost, making it particularly suitable for real-world business deployment:

⚡ Generation speed exceeds 100 tokens/second, offering rapid response and supporting low-latency interaction

💰 Extremely low API cost: Input only 0.8 RMB/million tokens, output 2 RMB/million tokens

🖥️ Fewer active parameters, lower computing power requirements, easy for high-concurrency operation locally or in the cloud

Truly achieving an AI service experience that is "high-performance, low-barrier."

🧠 Focus on Practical Capabilities: Intelligent Code Generation

GLM-4.5-Air performs stably in code generation, supporting:

Covering mainstream languages such as Python, JavaScript, and Java

Generating clear, maintainable code based on natural language instructions

Reducing templated output, aligning closely with real development scenario needs

Applicable to high-frequency development tasks such as rapid prototyping, automated completion, and bug fixing.

Experience GLM-4.5-Air for free now and start your agent development journey! Whether you want to build automated assistants, programming companions, or explore next-generation AI applications, GLM-4.5-Air will be your efficient and reliable AI engine.

📘 Get started now and unleash your creativity!

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio data storage follows system specifications. Data is automatically placed in the user directory at the following locations:

macOS: /Users/username/Library/Application Support/CherryStudioDev

Windows: C:\Users\username\AppData\Roaming\CherryStudio

Linux: /home/username/.config/CherryStudio

You can also view it at:

Method 1:

This can be achieved by creating a symbolic link. Exit the application, move the data to your desired location, then create a link at the original location pointing to the new path.

For detailed steps, refer to:

Method 2: Based on Electron application characteristics, modify the storage location by configuring launch parameters.

--user-data-dir Example: Cherry-Studio-*-x64-portable.exe --user-data-dir="%user_data_dir%"

Example:

init_cherry_studio.bat (encoding: ANSI)

Initial structure of user-data-dir:

This document was translated from Chinese by AI and has not yet been reviewed.

The well-known MaaS service platform "SiluFlow" provides free access to the Qwen3-8B model call service for everyone. As a cost-effective member of the Tongyi Qianwen Qwen3 series, Qwen3-8B achieves powerful capabilities in a compact size, making it an ideal choice for intelligent applications and efficient development.

🚀 What is Qwen3-8B?

Qwen3-8B is an 8-billion parameter dense model in the third-generation large model series of Tongyi Qianwen, released by Alibaba in April 2025. It adopts the Apache 2.0 open-source license and can be freely used for commercial and research scenarios.

Total Parameters: 8 billion

Architecture Type: Dense (pure dense structure)

Context Length: 128K tokens

Multilingual Support: Covers 119 languages and dialects

Despite its compact size, Qwen3-8B demonstrates stable performance in inference, code, mathematics, and Agent capabilities, comparable to larger previous-generation models, showing extremely high practicality in real-world applications.

📚 Powerful Training Foundation, Small Model with Big Wisdom

Qwen3-8B is pre-trained on approximately 36 trillion tokens of high-quality multilingual data, covering web text, technical documents, code repositories, and synthetic data from professional fields, providing extensive knowledge coverage.

The subsequent training phase introduced a four-stage reinforcement process, specifically optimizing the following capabilities:

✅ Natural language understanding and generation ✅ Mathematical reasoning and logical analysis ✅ Multilingual translation and expression ✅ Tool calling and task planning

Thanks to the comprehensive upgrade of the training system, Qwen3-8B's actual performance approaches or even surpasses Qwen2.5-14B, achieving a significant leap in parameter efficiency.

💡 Hybrid Inference Mode: Think or Respond Quickly?

Qwen3-8B supports flexible switching between "Thinking Mode" and "Non-Thinking Mode", allowing users to independently choose the response method based on task complexity.

Control modes via the following methods:

API Parameter Setting: enable_thinking=True/False

Prompt Instruction: Add /think or /no_think to the input

This design allows users to freely balance response speed and inference depth, enhancing the user experience.

⚙️ Native Agent Capability Support, Empowering Intelligent Applications

Qwen3-8B possesses excellent Agent capabilities and can be easily integrated into various automation systems:

🔹 Function Calling: Supports structured tool calling 🔹 MCP Protocol Compatibility: Natively supports the Model Context Protocol, facilitating extension of external capabilities 🔹 Multi-tool Collaboration: Can integrate plugins for search, calculators, code execution, etc.

It is recommended to use it in conjunction with the Qwen-Agent framework to quickly build intelligent assistants with memory, planning, and execution capabilities.

🌐 Extensive Language Support for Global Applications

Qwen3-8B supports 119 languages and dialects, including Chinese, English, Arabic, Spanish, Japanese, Korean, Indonesian, etc., making it suitable for international product development, cross-language customer service, multilingual content generation, and other scenarios.

Its understanding of Chinese is particularly outstanding, supporting simplified, traditional, and Cantonese expressions, making it suitable for the markets in Hong Kong, Macao, Taiwan, and overseas Chinese communities.

🧠 Strong Practical Capabilities, Wide Scenario Coverage

Qwen3-8B performs excellently in various high-frequency application scenarios:

✅ Code Generation: Supports mainstream languages such as Python, JavaScript, and Java, capable of generating runnable code based on requirements ✅ Mathematical Reasoning: Stable performance in benchmarks like GSM8K, suitable for educational applications ✅ Content Creation: Writes emails, reports, and copy with clear structure and natural language ✅ Intelligent Assistant: Can build lightweight AI assistants for personal knowledge base Q&A, schedule management, information extraction, etc.

Experience Qwen3-8B for free now through SiluFlow and start your journey with lightweight AI applications!

📘 Use now to make AI accessible!

This document was translated from Chinese by AI and has not yet been reviewed.

Ollama is an excellent open-source tool that allows you to easily run and manage various large language models (LLMs) locally. Cherry Studio now supports Ollama integration, enabling you to interact directly with locally deployed LLMs through the familiar interface without relying on cloud services!

Ollama is a tool that simplifies the deployment and use of large language models (LLMs). It has the following features:

Local Operation: Models run entirely on your local computer without requiring internet connectivity, protecting your privacy and data security.