Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

This document was translated from Chinese by AI and has not yet been reviewed.

Note: Installing Cherry Studio is not supported on Windows 7.

Installer Version (Setup)

Portable Version (Portable)

This document was translated from Chinese by AI and has not yet been reviewed.

Follow our social accounts: Twitter(X), Xiaohongshu, Weibo, Bilibili, Douyin

Join our communities: QQ Group(575014769), Telegram, Discord, WeChat Group(click to view)

Cherry Studio is an all-in-one AI assistant platform integrating multi-model conversations, knowledge base management, AI painting, translation, and more. Cherry Studio's highly customizable design, powerful extensibility, and user-friendly experience make it an ideal choice for professional users and AI enthusiasts. Whether you are a beginner or a developer, you can find suitable AI functions in Cherry Studio to enhance your work efficiency and creativity.

1. Basic Chat Functionality

One Question, Multiple Answers: Supports generating replies from multiple models simultaneously for the same question, allowing users to compare the performance of different models. For details, see Chat Interface.

Automatic Grouping: Conversation records for each assistant are automatically grouped and managed, making it easy for users to quickly find historical conversations.

Conversation Export: Supports exporting full or partial conversations to various formats (e.g., Markdown, Word) for easy storage and sharing.

Highly Customizable Parameters: In addition to basic parameter adjustments, it also supports custom parameters to meet personalized needs.

Assistant Market: Built-in with over a thousand industry-specific assistants, covering fields like translation, programming, and writing, while also supporting user-defined assistants.

Multiple Format Rendering: Supports Markdown rendering, formula rendering, real-time HTML preview, and other functions to enhance content display.

2. Integration of Various Special Features

AI Painting: Provides a dedicated painting panel where users can generate high-quality images through natural language descriptions.

AI Mini-programs: Integrates various free web-based AI tools, allowing direct use without switching browsers.

Translation Function: Supports a dedicated translation panel, in-conversation translation, prompt translation, and other translation scenarios.

File Management: Files from conversations, paintings, and knowledge bases are managed in a unified and classified manner, avoiding tedious searches.

Global Search: Supports quick location of historical records and knowledge base content, improving work efficiency.

3. Unified Management for Multiple Service Providers

Service Provider Model Aggregation: Supports unified calling of models from major service providers like OpenAI, Gemini, Anthropic, and Azure.

Automatic Model Fetching: One-click to get a complete list of models without manual configuration.

Multi-key Polling: Supports rotating multiple API keys to avoid rate limit issues.

Precise Avatar Matching: Automatically matches each model with an exclusive avatar for better recognition.

Custom Service Providers: Supports third-party service providers that comply with specifications like OpenAI, Gemini, and Anthropic, offering strong compatibility.

4. Highly Customizable Interface and Layout

Custom CSS: Supports global style customization to create a unique interface style.

Custom Chat Layout: Supports list or bubble style layouts and allows customization of message styles (e.g., code snippet styles).

Custom Avatars: Supports setting personalized avatars for the software and assistants.

Custom Sidebar Menu: Users can hide or reorder sidebar functions according to their needs to optimize the user experience.

5. Local Knowledge Base System

Multiple Format Support: Supports importing various file formats such as PDF, DOCX, PPTX, XLSX, TXT, and MD.

Multiple Data Source Support: Supports local files, URLs, sitemaps, and even manually entered content as knowledge base sources.

Knowledge Base Export: Supports exporting processed knowledge bases to share with others.

Search and Check Support: After importing a knowledge base, users can perform real-time retrieval tests to check the processing results and segmentation effects.

6. Special Focus Features

Quick Q&A: Summon a quick assistant in any context (e.g., WeChat, browser) to get answers quickly.

Quick Translation: Supports quick translation of words or text from other contexts.

Content Summarization: Quickly summarizes long text content to improve information extraction efficiency.

Explanation: Explains complex issues with one click, without needing complicated prompts.

7. Data Security

Multiple Backup Solutions: Supports local backup, WebDAV backup, and scheduled backups to ensure data safety.

Data Security: Supports fully local usage scenarios, combined with local large models, to avoid data leakage risks.

Beginner-Friendly: Cherry Studio is committed to lowering the technical barrier, allowing even users with no prior experience to get started quickly, focusing on their work, study, or creation.

Comprehensive Documentation: Provides detailed user manuals and FAQs to help users solve problems quickly.

Continuous Iteration: The project team actively responds to user feedback and continuously optimizes features to ensure the project's healthy development.

Open Source and Extensibility: Supports customization and extension through open-source code to meet personalized needs.

Knowledge Management and Query: Quickly build and query exclusive knowledge bases using the local knowledge base feature, suitable for research, education, and other fields.

Multi-model Conversation and Creation: Supports simultaneous conversation with multiple models, helping users quickly obtain information or generate content.

Translation and Office Automation: Built-in translation assistants and file processing functions are suitable for users who need cross-lingual communication or document processing.

AI Painting and Design: Generate images from natural language descriptions to meet creative design needs.

This document was translated from Chinese by AI and has not yet been reviewed.

Log in to Alibaba Cloud Bailian. If you don't have an Alibaba Cloud account, you'll need to register one.

Click the 创建我的 API-KEY (Create My API-KEY) button in the upper right corner.

In the pop-up window, select the default business space (or you can customize it), and you can enter a description if you want.

Click the 确定 (Confirm) button in the lower right corner.

Afterward, you should see a new row added to the list. Click the 查看 (View) button on the right.

Click the 复制 (Copy) button.

Go to Cherry Studio, navigate to Settings → Model Providers → Alibaba Cloud Bailian, find API Key, and paste the copied API key here.

You can adjust the relevant settings as described in Model Providers, and then you can start using it.

This document was translated from Chinese by AI and has not yet been reviewed.

Here you can set the default interface color mode (Light Mode, Dark Mode, or Follow System).

This setting is for the layout of the conversation interface.

Topic Position

Auto-switch to Topic

When this setting is enabled, clicking on the assistant's name will automatically switch to the corresponding topic page.

Show Topic Time

When enabled, the creation time of the topic will be displayed below the topic.

On this page, you can set the software's color theme, page layout, or use for personalized adjustments.

This setting allows for flexible and personalized changes to the interface. For specific methods, please refer to in the advanced tutorials.

This document was translated from Chinese by AI and has not yet been reviewed.

The Agents page is a hub for assistants. Here, you can select or search for the model presets you want. Clicking on a card will add the assistant to the assistant list on the chat page.

You can also edit and create your own assistants on this page.

Click on My, then click on Create Agent to start creating your own assistant.

This document was translated from Chinese by AI and has not yet been reviewed.

When an assistant does not have a default assistant model set, the model selected by default in a new conversation will be the one set here.

The model set here is also used for optimizing prompts and the pop-up text assistant.

After each conversation, a model is called to generate a topic name for the conversation. The model set here is the one used for naming.

The translation function in input boxes for conversations, drawing, etc., and the translation model on the translation interface all use the model set here.

The model used by the quick assistant feature. For details, see Quick Assistant

This document was translated from Chinese by AI and has not yet been reviewed.

Contact us via email at [email protected] to get editor access.

Title: Application for Cherry Studio Docs Editor Role

Body: State your reasons for applying

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio supports configuring the blacklist manually or by adding subscription sources. For configuration rules, please refer to ublacklist.

You can add rules for search results or click the toolbar icon to block specified websites. Rules can be specified using either: match patterns (example: *://*.example.com/*) or regular expressions (example: /example\.(net|org)/).

You can also subscribe to public rule sets. This website lists some subscriptions: https://iorate.github.io/ublacklist/subscriptions

Here are some recommended subscription source links:

https://git.io/ublacklist

Chinese

https://raw.githubusercontent.com/laylavish/uBlockOrigin-HUGE-AI-Blocklist/main/list_uBlacklist.txt

AI-generated

This document was translated from Chinese by AI and has not yet been reviewed.

In Cherry Studio, a single provider supports using multiple keys in a round-robin fashion. The rotation method is a list loop from front to back.

Add multiple keys separated by English commas. For example:

You must use English commas.

When using built-in providers, you generally do not need to fill in the API address. If you need to modify it, please strictly follow the address provided in the corresponding official documentation.

If the address provided by the provider is in the format https://xxx.xxx.com/v1/chat/completions, you only need to fill in the base URL part (https://xxx.xxx.com).

Cherry Studio will automatically append the remaining path (/v1/chat/completions). Failure to fill it in as required may result in it not working correctly.

Usually, clicking the Manage button at the bottom left of the provider configuration page will automatically fetch all models supported by that provider. Click the + sign from the fetched list to add them to the model list.

Click the check button after the API Key input box to test if the configuration is successful.

After successful configuration, be sure to turn on the switch in the upper right corner. Otherwise, the provider will remain disabled, and you will not be able to find the corresponding models in the model list.

This document was translated from Chinese by AI and has not yet been reviewed.

Log in and go to the token page

Create a new token (or you can directly use the default token ↑)

Copy the token

Open CherryStudio's provider settings and click Add at the bottom of the provider list.

Enter a note name, select OpenAI as the provider, and click OK.

Paste the key you just copied.

Go back to the page where you got the API Key and copy the root address from the browser's address bar, for example:

Add models (click Manage to automatically fetch or manually enter them) and toggle the switch in the upper right corner to enable them.

Other OneAPI themes may have different interfaces, but the method for adding them is the same as the process described above.

This document was translated from Chinese by AI and has not yet been reviewed.

Automatically installs MCP services (beta).

A basic implementation of persistent memory based on a local knowledge graph. This allows the model to remember relevant user information across different conversations.

An MCP server implementation that provides tools for dynamic and reflective problem-solving through structured thought processes.

An MCP server implementation that integrates the Brave Search API, providing dual functionality for web and local search.

An MCP server for fetching web page content from a URL.

A Node.js server that implements the Model Context Protocol (MCP) for file system operations.

This document was translated from Chinese by AI and has not yet been reviewed.

Use this method to clear CSS settings when you have set incorrect CSS or cannot enter the settings interface after setting the CSS.

Open the console, click on the CherryStudio window, and press the shortcut key Ctrl+Shift+I (MacOS: command+option+I).

In the console window that pops up, click Console.

Then, manually type document.getElementById('user-defined-custom-css').remove(). Copying and pasting will likely not execute.

After typing, press Enter to confirm and clear the CSS settings. Then, go back to CherryStudio's display settings and delete the problematic CSS code.

This document was translated from Chinese by AI and has not yet been reviewed.

Contact the developer via email: [email protected]

This page only introduces the interface functions. For configuration tutorials, please refer to the tutorial in the basic tutorials.

On the official , click + Create new secret key

Copy the generated key and open CherryStudio's .

The painting feature currently only supports the painting models from SiliconFlow. You can go to to register an account and to use it.

Join the Telegram discussion group for help:

GitHub Issues:

This document was translated from Chinese by AI and has not yet been reviewed.

We welcome contributions to Cherry Studio! You can contribute in the following ways:

Contribute Code: Develop new features or optimize existing code.

Fix Bugs: Submit fixes for bugs you find.

Maintain Issues: Help manage GitHub issues.

Product Design: Participate in design discussions.

Write Documentation: Improve user manuals and guides.

Community Engagement: Join discussions and help users.

Promote Usage: Spread the word about Cherry Studio.

Send an email to [email protected]

Email Subject: Apply to become a developer

Email Body: Reason for application

This document was translated from Chinese by AI and has not yet been reviewed.

Welcome to Cherry Studio (hereinafter referred to as "this software" or "we"). We place a high value on protecting your privacy. This Privacy Policy explains how we handle and protect your personal information and data. Please read and understand this policy carefully before using this software:

To optimize user experience and improve software quality, we may only collect the following anonymous, non-personal information:

• Software version information; • Activity and usage frequency of software features; • Anonymous crash and error log information;

The above information is completely anonymous, does not involve any personally identifiable data, and cannot be associated with your personal information.

To maximize the protection of your privacy and security, we explicitly promise:

• We will not collect, save, transmit, or process the model service API Key information you enter into this software; • We will not collect, save, transmit, or process any conversation data generated during your use of this software, including but not limited to chat content, command information, knowledge base information, vector data, and other custom content; • We will not collect, save, transmit, or process any personally identifiable sensitive information.

This software uses the API Key from a third-party model service provider that you apply for and configure yourself to perform model calls and conversation functions. The model services you use (e.g., large models, API interfaces, etc.) are provided by and are the sole responsibility of the third-party provider you choose. Cherry Studio only acts as a local tool to provide the interface calling function with third-party model services.

Therefore:

• All conversation data generated between you and the large model service is unrelated to Cherry Studio. We do not participate in data storage, nor do we conduct any form of data transmission or relay; • You need to review and accept the privacy policies and related terms of the corresponding third-party model service providers. The privacy policies for these services can be found on the official websites of each provider.

You are solely responsible for any privacy risks that may arise from using third-party model service providers. For specific privacy policies, data security measures, and related liabilities, please refer to the relevant content on the official website of your chosen model service provider. We assume no responsibility for this.

This policy may be adjusted appropriately with software version updates. Please check it regularly. When substantial changes to the policy occur, we will notify you in an appropriate manner.

If you have any questions about the content of this policy or Cherry Studio's privacy protection measures, please feel free to contact us.

Thank you for choosing and trusting Cherry Studio. We will continue to provide you with a secure and reliable product experience.

This document was translated from Chinese by AI and has not yet been reviewed.

For usage of the knowledge base, refer to the Knowledge Base Tutorial in the advanced tutorials.

This document was translated from Chinese by AI and has not yet been reviewed.

Quick Assistant is a convenient tool provided by Cherry Studio that allows you to quickly access AI functions in any application, enabling instant questioning, translation, summarization, and explanation.

Open Settings: Navigate to Settings -> Shortcuts -> Quick Assistant.

Enable the Switch: Find and turn on the switch for Quick Assistant.

Set Shortcut (Optional):

The default shortcut for Windows is Ctrl + E.

The default shortcut for macOS is ⌘ + E.

You can customize the shortcut here to avoid conflicts or to better suit your usage habits.

Invoke: In any application, press your set shortcut (or the default one) to open the Quick Assistant.

Interact: In the Quick Assistant window, you can perform the following actions directly:

Quick Question: Ask the AI any question.

Text Translation: Enter the text you need to translate.

Content Summarization: Input long text for a summary.

Explanation: Enter concepts or terms that need clarification.

Close: Press the ESC key or click anywhere outside the Quick Assistant window to close it.

Shortcut Conflicts: If the default shortcut conflicts with other applications, please modify it.

Explore More Features: In addition to the functions mentioned in the documentation, the Quick Assistant may support other operations, such as code generation, style conversion, etc. It is recommended that you continue to explore during use.

Feedback & Improvement: If you encounter any problems or have any suggestions for improvement during use, please provide feedback to the Cherry Studio team in a timely manner.

macOS 版本安装教程

This document was translated from Chinese by AI and has not yet been reviewed.

First, go to the official website's download page to download the Mac version, or click the direct link below.

Please make sure to download the correct chip version for your Mac.

After the download is complete, click here.

Drag the icon to install.

Go to Launchpad, find the Cherry Studio icon, and click it. If the Cherry Studio main interface opens, the installation is successful.

This document was translated from Chinese by AI and has not yet been reviewed.

Log in and open the token page

Click "Add Token"

Enter a token name and click "Submit" (other settings can be configured as needed).

Open the provider settings in CherryStudio and click Add at the bottom of the provider list.

Enter a memo name, select OpenAI as the provider, and click OK.

Paste the key you just copied.

Go back to the page where you obtained the API Key and copy the base URL from your browser's address bar. For example:

Add models (click "Manage" to fetch them automatically or enter them manually), then enable the switch in the top-right corner to start using them.

This document was translated from Chinese by AI and has not yet been reviewed.

The following uses the fetch feature as an example to demonstrate how to use MCP in Cherry Studio. You can find more details in the documentation.

In Settings - MCP Server, click the Install button to automatically download and install them. Since the downloads are directly from GitHub, the speed might be slow, and there is a high chance of failure. The success of the installation depends on whether the files exist in the folder mentioned below.

Executable Installation Directory:

Windows: C:\Users\YourUsername\.cherrystudio\bin

macOS, Linux: ~/.cherrystudio/bin

If the installation fails:

You can create a symbolic link (soft link) from the corresponding system command to this directory. If the directory does not exist, you need to create it manually. Alternatively, you can manually download the executable files and place them in this directory:

Bun: https://github.com/oven-sh/bun/releases UV: https://github.com/astral-sh/uv/releases

This document was translated from Chinese by AI and has not yet been reviewed.

Open Cherry Studio settings.

Find the MCP Server option.

Click Add Server.

Fill in the relevant parameters for the MCP Server (reference link). The content you may need to fill in includes:

Name: Customize a name, for example, fetch-server

Type: Select STDIO

Command: Fill in uvx

Arguments: Fill in mcp-server-fetch

(There may be other parameters, depending on the specific Server)

Click Save.

After completing the above configuration, Cherry Studio will automatically download the required MCP Server - fetch server. Once the download is complete, we can start using it! Note: If the mcp-server-fetch configuration is unsuccessful, you can try restarting your computer.

Successfully added an MCP server in the MCP Server settings

As you can see from the image above, by integrating MCP's fetch feature, Cherry Studio can better understand the user's query intent, retrieve relevant information from the web, and provide more accurate and comprehensive answers.

This document was translated from Chinese by AI and has not yet been reviewed.

Contact Person: Mr. Wang

📱:18954281942 (Not a customer service number)

For usage inquiries, you can join our user communication group at the bottom of the official website homepage, or email [email protected]

Or submit issues at: https://github.com/CherryHQ/cherry-studio/issues

If you need more guidance, you can join our Knowledge Planet

Commercial license details: https://docs.cherry-ai.com/contact-us/questions/cherrystudio-xu-ke-xie-yi

Windows 版本安装教程

This document was translated from Chinese by AI and has not yet been reviewed.

Note: Cherry Studio cannot be installed on Windows 7.

Click download and select the appropriate version

If the browser prompts that the file is not trusted, choose to keep it.

Choose Keep→Trust Cherry-Studio

This document was translated from Chinese by AI and has not yet been reviewed.

Supports exporting topics and messages to SiYuan Note.

Open SiYuan Note and create a new notebook.

Open the notebook settings and copy the Notebook ID.

Paste the copied Notebook ID into the Cherry Studio settings.

Enter the SiYuan Note address.

Local

Usually http://127.0.0.1:6806

Self-hosted

Your domain, e.g., http://note.domain.com

Copy the SiYuan Note API Token.

Paste it into the Cherry Studio settings and check the connection.

Congratulations, the SiYuan Note configuration is complete ✅ You can now export content from Cherry Studio to your SiYuan Note.

This document was translated from Chinese by AI and has not yet been reviewed.

The Dify Knowledge Base MCP requires upgrading Cherry Studio to v1.2.9 or higher.

Open Search MCP.

Add the dify-knowledge server.

Parameters and environment variables need to be configured

The Dify knowledge base key can be obtained as follows

This document was translated from Chinese by AI and has not yet been reviewed.

To use GitHub Copilot, you first need a GitHub account and a subscription to the GitHub Copilot service. A free version subscription is also acceptable, but the free version does not support the latest Claude 3.7 model. For details, please refer to the official GitHub Copilot website.

Click "Login with GitHub" to get the Device Code and copy it.

After successfully obtaining the Device Code, click the link to open your browser. Log in to your GitHub account in the browser, enter the Device Code, and authorize.

After successful authorization, return to Cherry Studio and click "Connect to GitHub". Upon success, your GitHub username and avatar will be displayed.

Click the "Manage" button below, and it will automatically connect to the internet to fetch the list of currently supported models.

Currently, requests are built using Axios, which does not support SOCKS proxies. Please use a system proxy or an HTTP proxy, or alternatively, do not set a proxy within CherryStudio and use a global proxy instead. First, ensure your network connection is stable to avoid failing to obtain the Device Code.

This document was translated from Chinese by AI and has not yet been reviewed.

Are you experiencing this: having 26 insightful articles saved in your WeChat Favorites that you never open again, more than 10 files scattered in a "study materials" folder on your computer, or trying to find a theory you read six months ago but only remembering a few keywords. When the daily amount of information exceeds your brain's processing limit, 90% of valuable knowledge is forgotten within 72 hours. Now, by building a personal knowledge base with the Infini-AI Large Model Service Platform API + Cherry Studio, you can transform those dust-gathering WeChat articles and fragmented course content into structured knowledge for precise retrieval.

1. Infini-AI API Service: The "Thinking Hub" of Your Knowledge Base, Easy-to-Use and Stable

As the "thinking hub" of the knowledge base, the Infini-AI Large Model Service Platform offers model versions like the full-power DeepSeek R1, providing stable API services. Currently, it's free to use with no barriers after registration. It supports mainstream embedding models like bge and jina for building knowledge bases. The platform also continuously updates with the latest, most powerful, and stable open-source model services, including various modalities such as images, videos, and voice.

2. Cherry Studio: Build a Knowledge Base with Zero Code

Cherry Studio is an easy-to-use AI tool. Compared to the 1-2 month deployment cycle required for RAG knowledge base development, this tool's advantage is its support for zero-code operation. You can import multiple formats like Markdown/PDF/webpages with one click. A 40MB file can be parsed in 1 minute. Additionally, you can add local computer folders, article URLs from WeChat Favorites, and course notes.

Step 1: Basic Preparation

Visit the official Cherry Studio website to download the appropriate version (https://cherry-ai.com/)

Register an account: Log in to the Infini-AI Large Model Service Platform (https://cloud.infini-ai.com/genstudio/model?cherrystudio)

Get API Key: In the "Model Square," select deepseek-r1, click create to get the APIKEY, and copy the model name.

Step 2: Open Cherry Studio settings, select Infini-AI in the Model Service, fill in the API Key, and enable the Infini-AI model service.

After completing the steps above, you can use Infini-AI's API service in Cherry Studio by selecting the desired large model during interaction. For convenience, you can also set a "Default Model" here.

Step 3: Add a Knowledge Base

Select any version of the bge series or jina series embedding models from the Infini-AI Large Model Service Platform.

After importing study materials, enter "Summarize the core formula derivations in Chapter 3 of 'Machine Learning'"

Generated result shown below

This document was translated from Chinese by AI and has not yet been reviewed.

1.2 Click on Settings in the bottom-left corner and select 【SiliconFlow】 under Model Service

1.2 Click the link to get the SiliconCloud API key

Log in to SiliconCloud (if you haven't registered, an account will be automatically created on your first login)

Visit API Keys to create a new key or copy an existing one

1.3 Click Manage to add a model

Click the "Chat" button in the left menu bar

Enter text in the input box to start chatting

You can switch models by selecting the model name in the top menu

This document was translated from Chinese by AI and has not yet been reviewed.

All data added to the Cherry Studio knowledge base is stored locally. During the addition process, a copy of the document will be placed in the Cherry Studio data storage directory.

Vector Database: https://turso.tech/libsql

After a document is added to the Cherry Studio knowledge base, the file will be split into several chunks, and then these chunks will be processed by an embedding model.

When using a large model for Q&A, text chunks related to the question will be retrieved and sent to the large language model for processing.

If you have data privacy requirements, it is recommended to use a local embedding database and a local large language model.

This document was translated from Chinese by AI and has not yet been reviewed.

Automatic MCP installation requires upgrading Cherry Studio to v1.1.18 or a higher version.

In addition to manual installation, Cherry Studio has a built-in tool, @mcpmarket/mcp-auto-install, which provides a more convenient way to install MCP servers. You just need to input the corresponding command in a large model conversation that supports MCP services.

Beta Phase Reminder:

@mcpmarket/mcp-auto-install is still in its beta phase.

The effectiveness depends on the "intelligence" of the large model. Some configurations will be added automatically, while others may still require manual changes to certain parameters in the MCP settings.

Currently, the search source is @modelcontextprotocol, which you can configure yourself (explained below).

For example, you can enter:

The system will automatically recognize your request and complete the installation via @mcpmarket/mcp-auto-install. This tool supports various types of MCP servers, including but not limited to:

filesystem

fetch

sqlite

and more...

The

MCP_PACKAGE_SCOPESvariable allows you to customize the MCP service search source. The default value is:@modelcontextprotocol, which can be configured.

@mcpmarket/mcp-auto-install LibraryThis document was translated from Chinese by AI and has not yet been reviewed.

Create an API Key.

After successful creation, click the eye icon next to the newly created API Key to reveal and copy it.

Paste the copied API Key into CherryStudio, then turn on the provider switch.

Click Add, and paste the previously obtained Model ID into the Model ID text box.

Follow this process to add models one by one.

There are two ways to write the API address:

The first is the client default: https://ark.cn-beijing.volces.com/api/v3/

The second way is: https://ark.cn-beijing.volces.com/api/v3/chat/completions#

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio supports importing topics into a Notion database.

Create an integration.

Name: Cherry Studio

Type: Select the first one

Icon: You can save this image

Copy the secret token and paste it into the Cherry Studio settings.

If your Notion database URL looks like this:

https://www.notion.so/<long_hash_1>?v=<long_hash_2>

Then the Notion database ID is the <long_hash_1> part.

Fill in the Page Title Field Name:

If your web page is in English, enter Name

If your web page is in Chinese, enter 名称

Congratulations, your Notion configuration is complete ✅ You can now export content from Cherry Studio to your Notion database.

This document was translated from Chinese by AI and has not yet been reviewed.

ModelScope MCP Server requires upgrading Cherry Studio to v1.2.9 or higher.

In version v1.2.9, Cherry Studio officially partnered with ModelScope, significantly simplifying the process of adding MCP servers. This helps avoid configuration errors and allows you to discover a vast number of MCP servers within the ModelScope community. Follow the steps below to learn how to sync ModelScope's MCP servers in Cherry Studio.

Click on MCP Server Settings in the settings, and select Sync Server.

Select ModelScope and browse to discover MCP services.

Register and log in to ModelScope, and view the MCP service details.

In the MCP service details, select "Connect Service".

Click "Get API Token" in Cherry Studio, which will redirect you to the official ModelScope website. Copy the API token and paste it back into Cherry Studio.

In the MCP server list in Cherry Studio, you can see the MCP service connected from ModelScope and call it in conversations.

For new MCP servers connected on the ModelScope webpage later, simply click Sync Server to add them incrementally.

By following the steps above, you have successfully learned how to easily sync MCP servers from ModelScope in Cherry Studio. The entire configuration process is not only greatly simplified, effectively avoiding the hassle and potential errors of manual configuration, but it also allows you to easily access the vast MCP server resources provided by the ModelScope community.

Start exploring and using these powerful MCP services to bring more convenience and possibilities to your Cherry Studio experience

MCP (Model Context Protocol) is an open-source protocol designed to provide context information to Large Language Models (LLMs) in a standardized way. For more information about MCP, please see

@mcpmarket/mcp-auto-install is an open-source npm package. You can view its detailed information and documentation on the . @mcpmarket is the official collection of MCP services for Cherry Studio.

Log in to

Click

Click on at the bottom of the sidebar.

In the at the bottom of the Ark console sidebar, activate the models you need. You can activate models like the Doubao series and DeepSeek as needed.

In the , find the Model ID corresponding to the desired model.

Open Cherry Studio's settings and find Volcano Engine.

For the difference between endings with / and #, refer to the API Address section in the provider settings documentation, .

Go to the website to create a new integration.

Go to the website and create a new page. Select the database type, name it Cherry Studio, and follow the illustration to connect.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio is a free and open-source project. As the project grows, the workload for the project team has also increased. To reduce communication costs and resolve your issues quickly and efficiently, we hope that you will follow the steps and methods below to handle problems before asking questions. This will allow the project team more time to focus on project maintenance and development. Thank you for your cooperation!

Most basic questions can be solved by carefully reading the documentation.

For questions about the software's features and usage, you can check the Feature Introduction documentation.

Frequently asked questions are collected on the FAQ page. You can check there first for solutions.

For more complex issues, you can try solving them directly by searching or asking in the search bar.

Be sure to carefully read the content in the hint boxes within each document, as this can help you avoid many problems.

Check or search the GitHub Issues page for similar problems and solutions.

For issues unrelated to the client's functionality (such as model errors, unexpected responses, or parameter settings), it is recommended to first search online for relevant solutions or describe the error message and problem to an AI to find a solution.

If the first two steps did not provide an answer or solve your problem, you can describe your issue in detail and seek help in our official Telegram Channel, Discord Channel, or (Click to Join).

If it's a model error, please provide a complete screenshot of the interface and the console error message. You can censor sensitive information, but the model name, parameter settings, and error content must be visible in the screenshot. To learn how to view console error messages, click here.

If it's a software bug, please provide a specific error description and detailed steps to help developers debug and fix it. If it's an intermittent issue that cannot be reproduced, please describe the relevant scenarios, context, and configuration parameters when the problem occurred in as much detail as possible. In addition, you also need to include platform information (Windows, Mac, or Linux) and the software version number in your problem description.

Requesting Documentation or Providing Suggestions

You can contact @Wangmouuu on our Telegram channel or QQ (1355873789), or send an email to: [email protected].

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio is a multi-model desktop client that currently supports installation packages for Windows, Linux, and macOS. It aggregates mainstream LLM models and provides multi-scenario assistance. Users can improve their work efficiency through intelligent session management, open-source customization, and multi-themed interfaces.

Cherry Studio is now deeply integrated with the PPIO high-performance API channel—ensuring high-speed responses for DeepSeek-R1/V3 and 99.9% service availability through enterprise-grade computing power, bringing you a fast and smooth experience.

The tutorial below provides a complete integration plan (including API key configuration), allowing you to enable the advanced mode of "Cherry Studio Intelligent Scheduling + PPIO High-Performance API" in just 3 minutes.

First, go to the official website to download Cherry Studio: https://cherry-ai.com/download (If you can't access it, you can use the Quark Web Drive link below to download the version you need: https://pan.quark.cn/s/c8533a1ec63e#/list/share

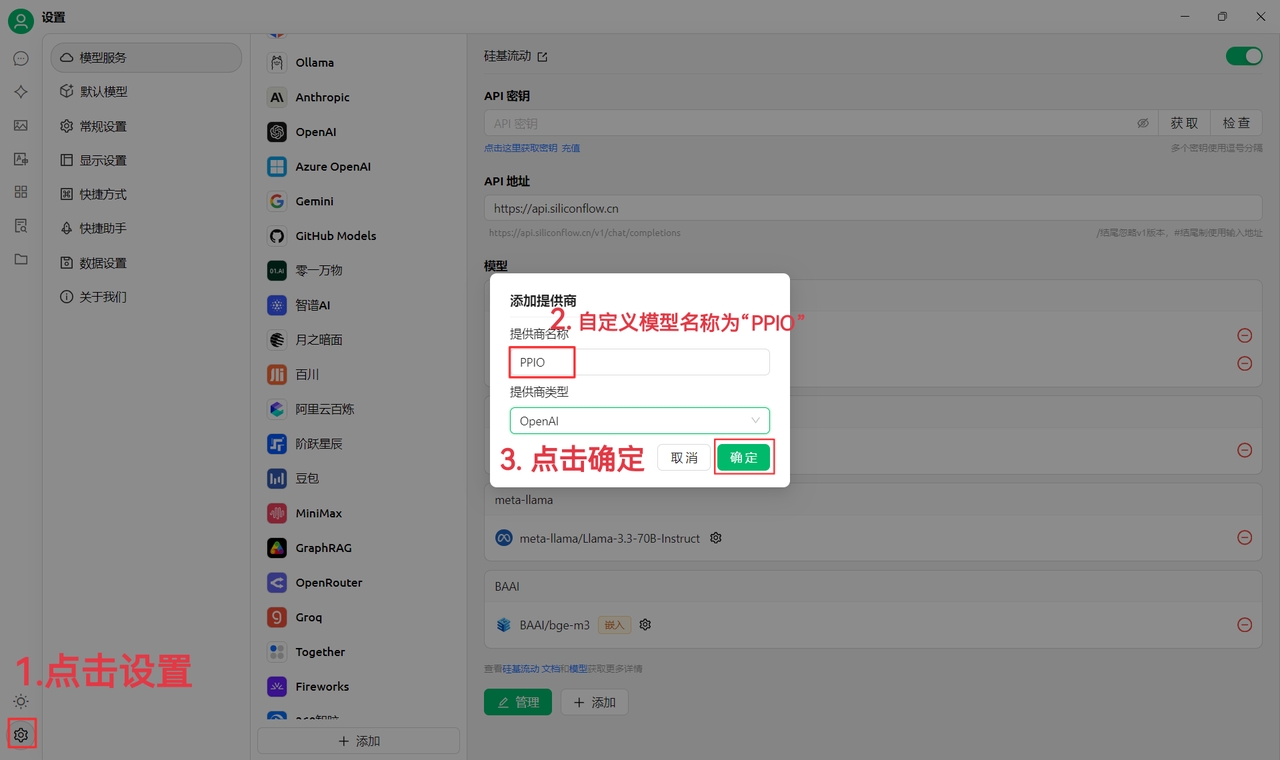

(1) First, click on Settings in the bottom left corner, set the custom provider name to: PPIO, and click "OK"

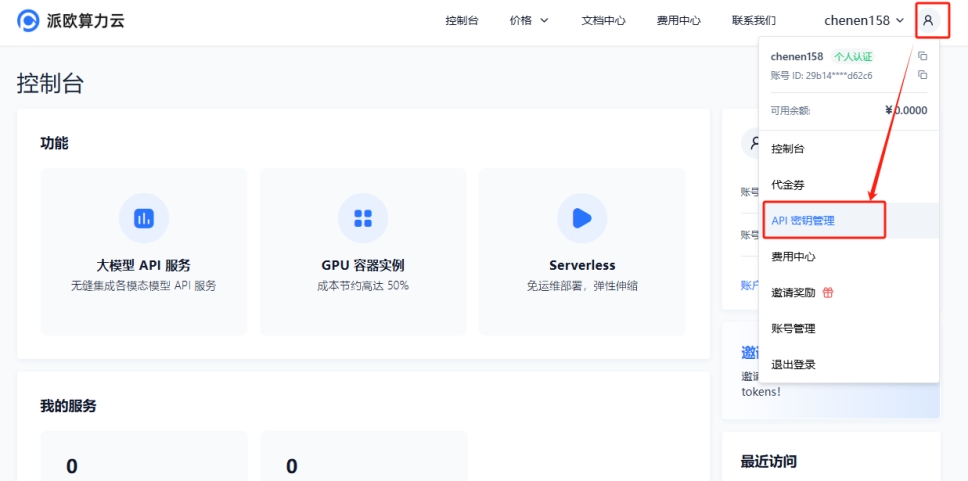

(2) Go to PPIO Compute Cloud API Key Management, click on your [User Avatar] — [API Key Management] to enter the console

Click the [+ Create] button to create a new API key. Give it a custom name. The generated key is only displayed at the time of creation. Be sure to copy and save it to a document to avoid affecting future use.

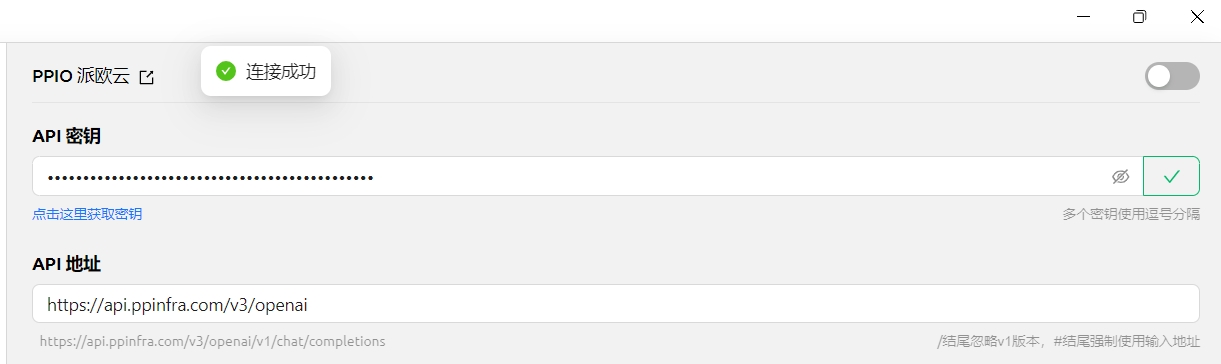

(3) In CherryStudio, enter the API key. Click Settings, select [PPIO Cloud], enter the API key generated on the official website, and finally click [Check].

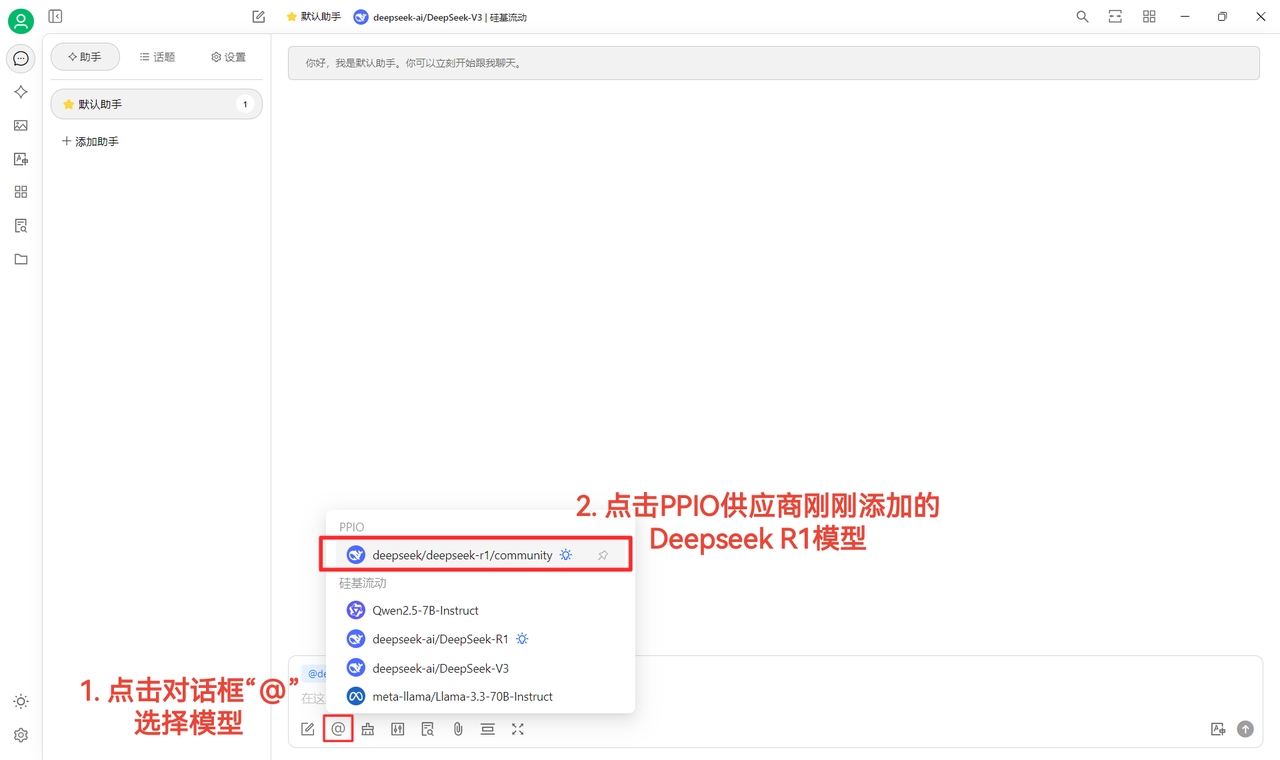

(4) Select the model: For example, deepseek/deepseek-r1/community. If you need to switch to another model, you can do so directly.

The community versions of DeepSeek R1 and V3 are for trial purposes. They are full-parameter models with no difference in stability or performance. For high-volume usage, you must top up your account and switch to a non-community version.

(1) Click [Check]. Once it shows "Connection successful," you can start using it normally.

(2) Finally, click on [@] and select the DeepSeek R1 model you just added under the PPIO provider to start chatting successfully~

[Some materials sourced from: 陈恩]

If you prefer visual learning, we have prepared a video tutorial on Bilibili. This step-by-step guide will help you quickly master the configuration of "PPIO API + Cherry Studio". Click the link below to go directly to the video and start your smooth development experience → 【Still frustrated by DeepSeek's endless loading?】PPIO Cloud + Full-power DeepSeek =? No more congestion, take off now!

[Video material sourced from: sola]

如何在 Cherry Studio 使用联网模式

This document was translated from Chinese by AI and has not yet been reviewed.

In the Cherry Studio question window, click the [Little Globe] icon to enable web search.

Mode 1: The model provider's large model has a built-in web search function

In this case, after enabling web search, you can use the service directly. It's very simple.

You can quickly determine if a model supports web search by checking for a small globe icon next to the model's name at the top of the chat interface.

On the model management page, this method also allows you to quickly distinguish which models support web search and which do not.

Cherry Studio currently supports the following model providers with web search capabilities:

Google Gemini

OpenRouter (all models support web search)

Tencent Hunyuan

Zhipu AI

Alibaba Cloud Bailian, etc.

Special Note:

There is a special case where a model can access the web even without the small globe icon, as explained in the tutorial below.

Mode 2: The model does not have a built-in web search function; use the Tavily service to enable it

When we use a large model without a built-in web search function (no small globe icon next to its name), but we need it to retrieve real-time information for processing, we need to use the Tavily web search service.

When using the Tavily service for the first time, a pop-up will prompt you to configure some settings. Please follow the instructions—it's very simple!

After clicking to get the API key, you will be automatically redirected to the official Tavily website's login/registration page. After registering and logging in, create an API key, then copy the key and paste it into Cherry Studio.

If you don't know how to register, refer to the Tavily web search login and registration tutorial in the same directory as this document.

Tavily registration reference document:

The interface below indicates that the registration was successful.

Let's try again to see the effect. The result shows that the web search is now working correctly, and the number of search results is our default setting: 5.

Note: Tavily has a monthly free usage limit. You will need to pay if you exceed it~~

PS: If you find any errors, please feel free to contact us.

This document was translated from Chinese by AI and has not yet been reviewed.

Go to Huawei Cloud to create an account and log in.

Click this link to enter the Maa S console.

Authorization

Click on Authentication Management in the sidebar, create an API Key (secret key), and copy it.

Then, create a new provider in CherryStudio.

After creation, fill in the secret key.

Click on Model Deployment in the sidebar and claim all models.

Click on Invoke.

Copy the address from ① and paste it into the Provider Address field in CherryStudio, and add a "#" symbol at the end.

And add a "#" symbol at the end.

And add a "#" symbol at the end.

And add a "#" symbol at the end.

And add a "#" symbol at the end.

Why add a "#" symbol? See here

Of course, you can also skip reading that and just follow the tutorial;

You can also fill it in by deleting

v1/chat/completions. As long as you know how to fill it in, any method works. If you don't know how, be sure to follow the tutorial.

Then, copy the model name from ②, and in CherryStudio, click the "+Add" button to create a new model.

Enter the model name. Do not add anything extra or include quotes. Copy it exactly as it is written in the example.

Click the Add Model button to finish adding.

This document was translated from Chinese by AI and has not yet been reviewed.

In version 0.9.1, CherryStudio introduced the long-awaited knowledge base feature.

Below, we will provide detailed instructions for using CherryStudio step-by-step.

In the Model Management service, find a model. You can click "Embedding Model" to filter quickly;

Find the model you need and add it to "My Models".

Knowledge Base Entry: On the left toolbar of CherryStudio, click the knowledge base icon to enter the management page;

Add Knowledge Base: Click "Add" to start creating a knowledge base;

Naming: Enter a name for the knowledge base and add an embedding model, for example, bge-m3, to complete the creation.

Add Files: Click the "Add Files" button to open the file selector;

Select Files: Choose supported file formats like pdf, docx, pptx, xlsx, txt, md, mdx, etc., and open them;

Vectorization: The system will automatically perform vectorization. When it shows "Completed" (green ✓), it means vectorization is finished.

CherryStudio supports adding data in multiple ways:

Folder Directory: You can add an entire folder directory. Files in supported formats within this directory will be automatically vectorized;

URL Link: Supports website URLs, such as https://docs.siliconflow.cn/introduction;

Sitemap: Supports XML-formatted sitemaps, such as https://docs.siliconflow.cn/sitemap.xml;

Plain Text Note: Supports inputting custom content as plain text.

Once files and other materials have been vectorized, you can start querying:

Click the "Search Knowledge Base" button at the bottom of the page;

Enter your query;

The search results will be displayed;

And the match score for each result will be shown.

Create a new topic. In the conversation toolbar, click on the knowledge base icon. A list of created knowledge bases will expand. Select the one you want to reference;

Enter and send your question. The model will return an answer generated from the search results;

Additionally, the referenced data sources will be attached below the answer, allowing for quick access to the source files.

This document was translated from Chinese by AI and has not yet been reviewed.

This interface allows you to perform operations such as cloud and local backup of client data, querying the local data directory, and clearing the cache.

Currently, data backup only supports WebDAV. You can choose a service that supports WebDAV for cloud backup.

Taking Jianguoyun as an Example

Log in to Jianguoyun, click on the username in the upper right corner, and select "Account Info":

Select "Security Options" and click "Add Application"

Enter the application name and generate a random password;

Copy and save the password;

Obtain the server address, account, and password;

In Cherry Studio Settings -> Data Settings, fill in the WebDAV information;

Choose to back up or restore data, and you can set the automatic backup time interval.

Generally, the easiest WebDAV services to get started with are cloud storage providers:

123Pan (Requires membership)

Aliyun Drive (Requires purchase)

Box (Free space is 10GB, single file size limit is 250MB.)

Dropbox (Dropbox offers 2GB for free, and you can get up to 16GB by inviting friends.)

TeraCloud (Free space is 10GB, and an additional 5GB can be obtained through referrals.)

Yandex Disk (Provides 10GB of capacity for free users.)

Next are some services that you need to deploy yourself:

如何注册tavily?

This document was translated from Chinese by AI and has not yet been reviewed.

Visit the official website mentioned above, or go to Cherry Studio -> Settings -> Web Search and click "Get API Key". This will redirect you to the Tavily login/registration page.

If this is your first time, you need to Sign up for an account before you can Log in. Note that the page defaults to the login page.

Click to sign up for an account to enter the following interface. Enter your commonly used email address, or use your Google/GitHub account. Then, enter your password in the next step. This is a standard procedure.

🚨🚨🚨[Crucial Step] After successful registration, there will be a dynamic verification code step. You need to scan a QR code to generate a one-time code to continue.

It's very simple. You have two options at this point.

Download an authenticator app, like Microsoft Authenticator. [Slightly more complicated]

Use the WeChat Mini Program: 腾讯身份验证器. [Simple, anyone can do it, recommended]

Open the WeChat Mini Program and search for: 腾讯身份验证器

After completing the steps above, you will see the interface below, which means your registration was successful. Copy the key to Cherry Studio, and you can start using it happily.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio not only integrates mainstream AI model services but also gives you powerful customization capabilities. With the Custom AI Provider feature, you can easily connect to any AI model you need.

Flexibility: No longer limited to the preset list of providers, you are free to choose the AI model that best suits your needs.

Diversity: Experiment with AI models from various platforms to discover their unique advantages.

Controllability: Directly manage your API keys and access addresses to ensure security and privacy.

Customization: Integrate privately deployed models to meet the needs of specific business scenarios.

You can add your custom AI provider in Cherry Studio in just a few simple steps:

Open Settings: In the left navigation bar of the Cherry Studio interface, click "Settings" (the gear icon).

Go to Model Services: On the settings page, select the "Model Services" tab.

Add Provider: On the "Model Services" page, you will see a list of existing providers. Click the "+ Add" button below the list to open the "Add Provider" pop-up window.

Fill in Information: In the pop-up window, you need to fill in the following information:

Provider Name: Give your custom provider an easily recognizable name (e.g., MyCustomOpenAI).

Provider Type: Select your provider type from the drop-down list. Currently supported types are:

OpenAI

Gemini

Anthropic

Azure OpenAI

Save Configuration: After filling in the information, click the "Add" button to save your configuration.

After adding a provider, you need to find it in the list and configure its details:

Enable Status: On the far right of the custom provider list, there is an enable switch. Turning it on enables this custom service.

API Key:

Fill in the API Key provided by your AI service provider.

Click the "Check" button on the right to verify the key's validity.

API Address:

Fill in the API access address (Base URL) for the AI service.

Be sure to refer to the official documentation provided by your AI service provider to get the correct API address.

Model Management:

Click the "+ Add" button to manually add the model IDs you want to use under this provider, such as gpt-3.5-turbo, gemini-pro, etc.

If you are unsure of the specific model names, please refer to the official documentation provided by your AI service provider.

Click the "Manage" button to edit or delete the models that have been added.

After completing the above configuration, you can select your custom AI provider and model in the Cherry Studio chat interface and start conversing with the AI!

vLLM is a fast and easy-to-use LLM inference library, similar to Ollama. Here are the steps to integrate vLLM into Cherry Studio:

Start the vLLM Service: Start the service using the OpenAI-compatible interface provided by vLLM. There are two main ways to do this:

Start using vllm.entrypoints.openai.api_server

Start using uvicorn

Ensure the service starts successfully and listens on the default port 8000. Of course, you can also specify the port number for the vLLM service using the --port parameter.

Add vLLM Provider in Cherry Studio:

Follow the steps described earlier to add a new custom AI provider in Cherry Studio.

Provider Name: vLLM

Provider Type: Select OpenAI.

Configure vLLM Provider:

API Key: Since vLLM does not require an API key, you can leave this field blank or fill in any content.

API Address: Fill in the API address of the vLLM service. By default, the address is: http://localhost:8000/ (if you use a different port, please modify it accordingly).

Model Management: Add the model name you loaded in vLLM. In the example python -m vllm.entrypoints.openai.api_server --model gpt2 above, you should enter gpt2 here.

Start Chatting: Now, you can select the vLLM provider and the gpt2 model in Cherry Studio and start chatting with the vLLM-powered LLM!

Read the Documentation Carefully: Before adding a custom provider, be sure to carefully read the official documentation of the AI service provider you are using to understand key information such as API keys, access addresses, and model names.

Check the API Key: Use the "Check" button to quickly verify the validity of the API key to avoid issues caused by an incorrect key.

Pay Attention to the API Address: The API address may vary for different AI service providers and models. Be sure to fill in the correct address.

Add Models On-Demand: Please only add the models you will actually use to avoid adding too many unnecessary models.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio's data storage follows system specifications, and data is automatically placed in the user's directory. The specific directory locations are as follows:

macOS: /Users/username/Library/Application Support/CherryStudioDev

Windows: C:\Users\username\AppData\Roaming\CherryStudio

Linux: /home/username/.config/CherryStudio

You can also check the location here:

Method 1:

This can be achieved by creating a symbolic link. Exit the application, move the data to your desired location, and then create a link at the original location pointing to the new location.

Method 2: Based on the characteristics of Electron applications, you can modify the storage location by configuring launch parameters.

--user-data-dir e.g.: Cherry-Studio-*-x64-portable.exe --user-data-dir="%user_data_dir%"

Example:

init_cherry_studio.bat (encoding: ANSI)

Directory structure of user-data-dir after initialization:

This document was translated from Chinese by AI and has not yet been reviewed.

Tokens are the basic units that AI models use to process text. You can think of them as the smallest unit of "thought" for the model. They are not exactly equivalent to characters or words as we understand them, but rather a special way the model segments text.

1. Chinese Tokenization

A Chinese character is usually encoded as 1-2 tokens.

For example: "你好" ≈ 2-4 tokens

2. English Tokenization

Common words are usually 1 token.

Longer or less common words are broken down into multiple tokens.

For example:

"hello" = 1 token

"indescribable" = 4 tokens

3. Special Characters

Spaces, punctuation marks, etc., also consume tokens.

A newline character is usually 1 token.

A Tokenizer is the tool an AI model uses to convert text into tokens. It determines how to split the input text into the smallest units that the model can understand.

1. Different Training Data

Different corpora lead to different optimization directions.

Varying degrees of multilingual support.

Specialized optimizations for specific domains (e.g., medical, legal).

2. Different Tokenization Algorithms

BPE (Byte Pair Encoding) - OpenAI GPT series

WordPiece - Google BERT

SentencePiece - Suitable for multilingual scenarios

3. Different Optimization Goals

Some focus on compression efficiency.

Some focus on semantic preservation.

Some focus on processing speed.

The same text may have a different number of tokens in different models:

Basic Concept: An embedding model is a technique that converts high-dimensional discrete data (text, images, etc.) into low-dimensional continuous vectors. This transformation allows machines to better understand and process complex data. Imagine it as simplifying a complex puzzle into a simple coordinate point that still retains the key features of the puzzle. In the large model ecosystem, it acts as a "translator," converting human-understandable information into a numerical form that AI can compute.

How it Works: Taking natural language processing as an example, an embedding model can map words to specific positions in a vector space. In this space, words with similar meanings will automatically cluster together. For example:

The vectors for "king" and "queen" will be very close.

Pet-related words like "cat" and "dog" will also be near each other.

Words with unrelated meanings, like "car" and "bread," will be far apart.

Main Application Scenarios:

Text analysis: document classification, sentiment analysis

Recommendation systems: personalized content recommendations

Image processing: similar image retrieval

Search engines: semantic search optimization

Core Advantages:

Dimensionality Reduction: Simplifies complex data into easy-to-process vector form.

Semantic Preservation: Retains key semantic information from the original data.

Computational Efficiency: Significantly improves the training and inference efficiency of machine learning models.

Technical Value: Embedding models are fundamental components of modern AI systems. They provide high-quality data representations for machine learning tasks and are a key technology driving progress in fields like natural language processing and computer vision.

Basic Workflow:

Knowledge Base Preprocessing Stage

Split documents into appropriately sized chunks.

Use an embedding model to convert each chunk into a vector.

Store the vectors and the original text in a vector database.

Query Processing Stage

Convert the user's question into a vector.

Retrieve similar content from the vector database.

Provide the retrieved relevant content to the LLM as context.

MCP is an open-source protocol designed to provide contextual information to Large Language Models (LLMs) in a standardized way.

Analogy: You can think of MCP as the "USB drive" of the AI world. We know that a USB drive can store various files and be used directly after being plugged into a computer. Similarly, various "plugins" that provide context can be "plugged" into an MCP Server. An LLM can request these plugins from the MCP Server as needed to obtain richer contextual information and enhance its capabilities.

Comparison with Function Tools: Traditional Function Tools can also provide external functionalities for LLMs, but MCP is more like a higher-dimensional abstraction. A Function Tool is more of a tool for specific tasks, whereas MCP provides a more general, modular mechanism for acquiring context.

Standardization: MCP provides a unified interface and data format, allowing different LLMs and context providers to collaborate seamlessly.

Modularity: MCP allows developers to break down contextual information into independent modules (plugins), making them easier to manage and reuse.

Flexibility: LLMs can dynamically select the required context plugins based on their needs, enabling more intelligent and personalized interactions.

Extensibility: MCP's design supports the future addition of more types of context plugins, offering limitless possibilities for expanding the capabilities of LLMs.

This document was translated from Chinese by AI and has not yet been reviewed.

Knowledge base document preprocessing requires upgrading Cherry Studio to v1.5.0 or higher.

After clicking 'Get API KEY', the application URL will open in your browser. Click 'Apply Now', fill out the form to get the API KEY, and then enter it into the API KEY field.

Configure the created knowledge base as shown above to complete the knowledge base document preprocessing setup.

You can check the knowledge base results by using the search in the upper right corner.

Knowledge Base Tips: When using a more capable model, you can change the knowledge base search mode to intent recognition. Intent recognition can describe your questions more accurately and broadly.

You can also synchronize data across multiple devices by following the process: Computer A WebDAV Computer B.

Install vLLM: Install vLLM by following the official vLLM documentation ().

For specific steps, please refer to:

For more theme variables, please refer to the source code:

Cherry Studio Theme Library:

Share some Chinese-style Cherry Studio theme skins:

This document was translated from Chinese by AI and has not yet been reviewed.

By using or distributing any part or element of the Cherry Studio Materials, you will be deemed to have acknowledged and accepted the content of this Agreement, which shall become effective immediately.

This Cherry Studio License Agreement (hereinafter referred to as the “Agreement”) shall mean the terms and conditions for use, reproduction, distribution, and modification of the Materials as defined by this Agreement.

“We” (or “Us”) shall mean Shanghai Qianhui Technology Co., Ltd.

“You” (or “Your”) shall mean a natural person or legal entity exercising the rights granted by this Agreement, and/or using the Materials for any purpose and in any field of use.

“Third Party” shall mean an individual or legal entity that does not have common control with either Us or You.

“Cherry Studio” shall mean this software suite, including but not limited to [e.g., core libraries, editors, plugins, sample projects], as well as source code, documentation, sample code, and other elements of the foregoing distributed by Us. (Please describe in detail according to the actual composition of Cherry Studio)

“Materials” shall collectively refer to the proprietary Cherry Studio and documentation (and any part thereof) of Shanghai Qianhui Technology Co., Ltd., provided under this Agreement.

“Source” form shall mean the preferred form for making modifications, including but not limited to source code, documentation source files, and configuration files.

“Object” form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types.

“Commercial Use” means for the purpose of direct or indirect commercial gain or commercial advantage, including but not limited to sales, licensing, subscriptions, advertising, marketing, training, consulting services, etc.

“Modification” means any change, adjustment, derivation, or secondary development of the Source form of the Materials, including but not limited to modifying the application name, logo, code, functionality, interface, etc.

Free Commercial Use (Limited to Unmodified Code): We hereby grant You a non-exclusive, worldwide, non-transferable, royalty-free license, under the intellectual property or other rights owned by Us or embodied in the Materials, to use, reproduce, distribute, copy, and distribute the unmodified Materials, including for Commercial Use, subject to the terms and conditions of this Agreement.

Commercial License (When Required): When the conditions described in Section III “Commercial License” are met, you must obtain an explicit written commercial license from Us to exercise the rights under this Agreement.

In any of the following situations, you must contact Us and obtain an explicit written commercial license before you can continue to use the Cherry Studio Materials:

Modification and Derivation: You modify the Cherry Studio Materials or develop derivatives based on them (including but not limited to modifying the application name, logo, code, functionality, interface, etc.).

Enterprise Services: Providing services based on Cherry Studio within your enterprise or to enterprise customers, where such service supports 10 or more cumulative users.

Hardware Bundling: You pre-install or integrate Cherry Studio into hardware devices or products for bundled sales.

Large-Scale Procurement by Government or Educational Institutions: Your use case is part of a large-scale procurement project by a government or educational institution, especially when it involves sensitive requirements such as security and data privacy.

Public-Facing Cloud Services: Providing public-facing cloud services based on Cherry Studio.

You may distribute copies of the unmodified Materials, or provide them as part of a product or service that includes the unmodified Materials, in Source or Object form, provided that You meet the following conditions:

You must provide a copy of this Agreement to any other recipient of the Materials;

You must, in all copies of the Materials that you distribute, retain the following attribution notice and place it in a “NOTICE” or similar text file distributed as part of such copies: `"Cherry Studio is licensed under the Cherry Studio LICENSE AGREEMENT, Copyright (c) 上海千彗科技有限公司. All Rights Reserved."` (Cherry Studio is licensed under the Cherry Studio License Agreement, Copyright (c) 上海千彗科技有限公司. All rights reserved.)

The Materials may be subject to export controls or restrictions. You shall comply with applicable laws and regulations when using the Materials.

If You use the Materials or any of their outputs or results to create, train, fine-tune, or improve software or models that will be distributed or provided, We encourage You to prominently display “Built with Cherry Studio” or “Powered by Cherry Studio” in the relevant product documentation.

We retain all intellectual property rights in and to the Materials and derivative works made by or for Us. Subject to the terms and conditions of this Agreement, the ownership of intellectual property rights for modifications and derivative works of the Materials made by You will be stipulated in a specific commercial license agreement. Without obtaining a commercial license, You do not own the rights to your modifications and derivative works of the Materials, and their intellectual property rights remain with Us.

No trademark license is granted to use Our trade names, trademarks, service marks, or product names, except as required for reasonable and customary use in describing and redistributing the Materials or as required to fulfill the notice obligations under this Agreement.

If You initiate a lawsuit or other legal proceeding (including a counterclaim or cross-claim in a lawsuit) against Us or any entity, alleging that the Materials or any of its outputs, or any portion of the foregoing, infringes any intellectual property or other rights owned or licensable by You, then all licenses granted to You under this Agreement shall terminate as of the date such lawsuit or other legal proceeding is initiated or filed.

We have no obligation to support, update, provide training for, or develop any further versions of the Cherry Studio Materials, nor to grant any related licenses.

The Materials are provided "as is" without any warranty of any kind, either express or implied, including warranties of merchantability, non-infringement, or fitness for a particular purpose. We make no warranty and assume no responsibility for the security or stability of the Materials and their outputs.

In no event shall We be liable to You for any damages, including but not limited to any direct, indirect, special, or consequential damages, arising out of your use or inability to use the Materials or any of their outputs, however caused.

You will defend, indemnify, and hold Us harmless from any claims by any third party arising out of or related to your use or distribution of the Materials.

The term of this Agreement shall commence upon your acceptance of this Agreement or your access to the Materials and will continue in full force and effect until terminated in accordance with the terms and conditions of this Agreement.

We may terminate this Agreement if You breach any of its terms or conditions. Upon termination of this Agreement, You must cease using the Materials. Section VII, Section IX, and "II. Contributor Agreement" shall survive the termination of this Agreement.

This Agreement and any dispute arising from or related to this Agreement shall be governed by the laws of China.

The Shanghai People's Court shall have exclusive jurisdiction over any dispute arising from this Agreement.

数据设置→Obsidian配置

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio supports integration with Obsidian, allowing you to export entire conversations or single messages to your Obsidian vault.

This process does not require installing any additional Obsidian plugins. However, since Cherry Studio's import mechanism is similar to the Obsidian Web Clipper, it's recommended to upgrade Obsidian to the latest version (at least greater than 1.7.2) to avoid import failures with long conversations.

Open Cherry Studio's Settings → Data Settings → Obsidian Configuration menu. The dropdown will automatically list the Obsidian vaults that have been opened on your machine. Select your target Obsidian vault:

Exporting an Entire Conversation

Go back to the conversation interface in Cherry Studio, right-click on the conversation, select Export, and click Export to Obsidian:

A window will pop up, allowing you to adjust the Properties, the folder location in Obsidian, and the handling method for the exported note:

Vault: Click the dropdown menu to select other Obsidian vaults

Path: Click the dropdown menu to select the folder where the exported note will be stored

As Obsidian note properties (Properties):

Tags (tags)

Creation time (created)

Source (source)

There are three handling methods for exporting to Obsidian:

There are three handling methods for exporting to Obsidian:

Create new (overwrite if exists): Creates a new note in the folder specified in the Path. If a note with the same name already exists, it will be overwritten.

Prepend: If a note with the same name exists, the selected conversation content will be prepended to the beginning of that note.

Append: If a note with the same name exists, the selected conversation content will be appended to the end of that note.

After selecting all options, click OK to export the entire conversation to the corresponding folder in the specified Obsidian vault.

Exporting a Single Message

To export a single message, click the three-bar menu below the message, select Export, and click Export to Obsidian:

A window similar to the one for exporting an entire conversation will appear, asking you to configure the note properties and handling method. Follow the tutorial above to complete the process.

🎉 Congratulations! You have now completed all the configurations for integrating Cherry Studio with Obsidian and have gone through the entire export process. Enjoy!

Open your Obsidian vault and create a folder to save the exported conversations (the example in the image uses a folder named "Cherry Studio"):

Take note of the text in the bottom-left corner; this is your vault name.

In Cherry Studio's Settings → Data Settings → Obsidian Configuration menu, enter the vault name and folder name you noted in Step 1:

The Global Tags field is optional. You can set tags that will be applied to all exported conversations in Obsidian. Fill it in as needed.

Exporting an Entire Conversation

Go back to the conversation interface in Cherry Studio, right-click on the conversation, select Export, and click Export to Obsidian.

A window will pop up, allowing you to adjust the Properties for the exported note and the handling method. There are three handling methods for exporting to Obsidian:

Create new (overwrite if exists): Creates a new note in the folder you specified in Step 2. If a note with the same name already exists, it will be overwritten.

Prepend: If a note with the same name exists, the selected conversation content will be prepended to the beginning of that note.

Append: If a note with the same name exists, the selected conversation content will be appended to the end of that note.

Exporting a Single Message

To export a single message, click the three-bar menu below the message, select Export, and click Export to Obsidian.

A window similar to the one for exporting an entire conversation will appear, asking you to configure the note properties and handling method. Follow the tutorial above to complete the process.

🎉 Congratulations! You have now completed all the configurations for integrating Cherry Studio with Obsidian and have gone through the entire export process. Enjoy!

This document was translated from Chinese by AI and has not yet been reviewed.

Before obtaining a Gemini API key, you need to have a Google Cloud project (if you already have one, you can skip this process).

Go to Google Cloud to create a project, fill in the project name, and click Create Project.

On the official API Key page, click Create API key.

Copy the generated key and open the Provider Settings in CherryStudio.

Find the Gemini provider and paste the key you just obtained.

Click Manage or Add at the bottom, add the supported models, and enable the provider switch in the top right corner to start using it.

Monaspace

English Font Commercial Use

GitHub has launched an open-source font family called Monaspace, which offers five styles: Neon (modern), Argon (humanist), Xenon (serif), Radon (handwriting), and Krypton (mechanical).

MiSans Global

Multilingual Commercial Use

MiSans Global is a global font customization project led by Xiaomi, in collaboration with Monotype and Hanyi Fonts.

This is a vast font family, covering over 20 writing systems and supporting more than 600 languages.

This document was translated from Chinese by AI and has not yet been reviewed.

Cherry Studio's translation feature provides you with fast and accurate text translation services, supporting mutual translation between multiple languages.

The translation interface mainly consists of the following parts:

Source Language Selection Area:

Any Language: Cherry Studio will automatically detect the source language and translate it.

Target Language Selection Area:

Dropdown Menu: Select the language you want to translate the text into.

Settings Button:

Clicking it will take you to the Default Model Settings.

Scroll Sync:

Click to toggle scroll sync (scrolling on one side will also scroll the other side).

Text Input Box (Left):

Enter or paste the text you need to translate.

Translation Result Box (Right):

Displays the translated text.

Copy Button: Click the button to copy the translation result to the clipboard.

Translate Button:

Click this button to start the translation.

Translation History (Top Left):

Click to view the translation history.

Select the Target Language:

In the target language selection area, choose the language you want to translate into.

Enter or Paste Text:

Enter or paste the text you want to translate into the text input box on the left.

Start Translation:

Click the Translate button.

View and Copy the Result:

The translation result will be displayed in the result box on the right.

Click the copy button to copy the translation result to the clipboard.